Claude Opus 4.5 and Gemini App Upgrade | Weekly Digest

PLUS HOT AI Tools & Tutorials

Hey! Welcome to the latest Creators’ AI Edition. We hope your Thanksgiving week is going well.

The main character this week is Anthropic with Claude Opus 4.5, but there is more: Google’s Gemini app added interactive diagrams, OpenAI integrated Voice Mode directly into ChatGPT, and FLUX 2 returned with ultra-realistic image generation.

But let’s take everything in order.

Featured Materials 🎟️

News of the week 🌍

Useful tools ⚒️

Weekly Guides 📕

AI Meme of the Week 🤡

AI Tweet of the Week 🐦

Bonus Materials 🎁

It’s Black Friday! Don’t miss it — this Friday only, get 15% OFF Annual Subscriptions. Limited Offer ⏳🔥

Featured Materials 🎟️

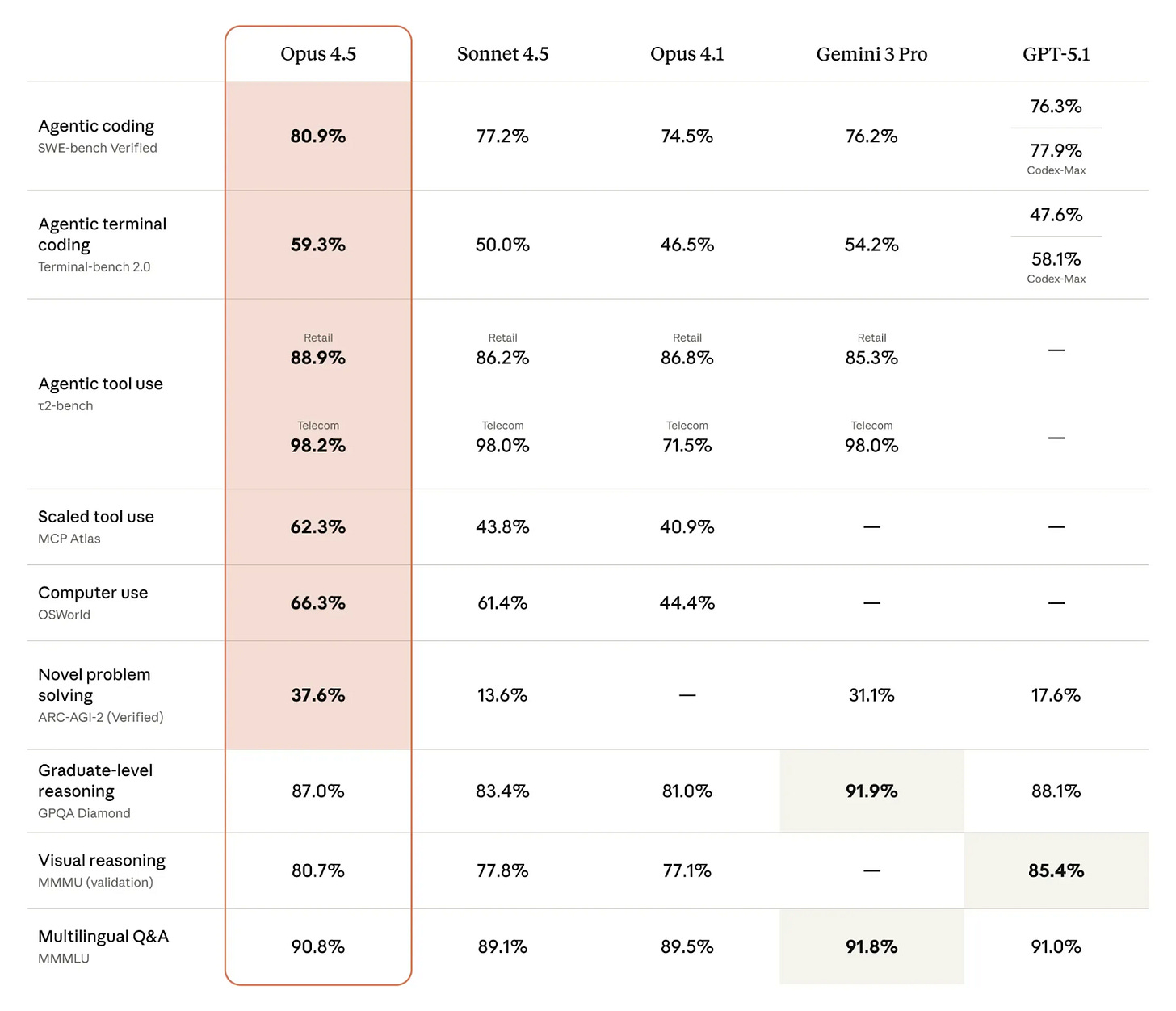

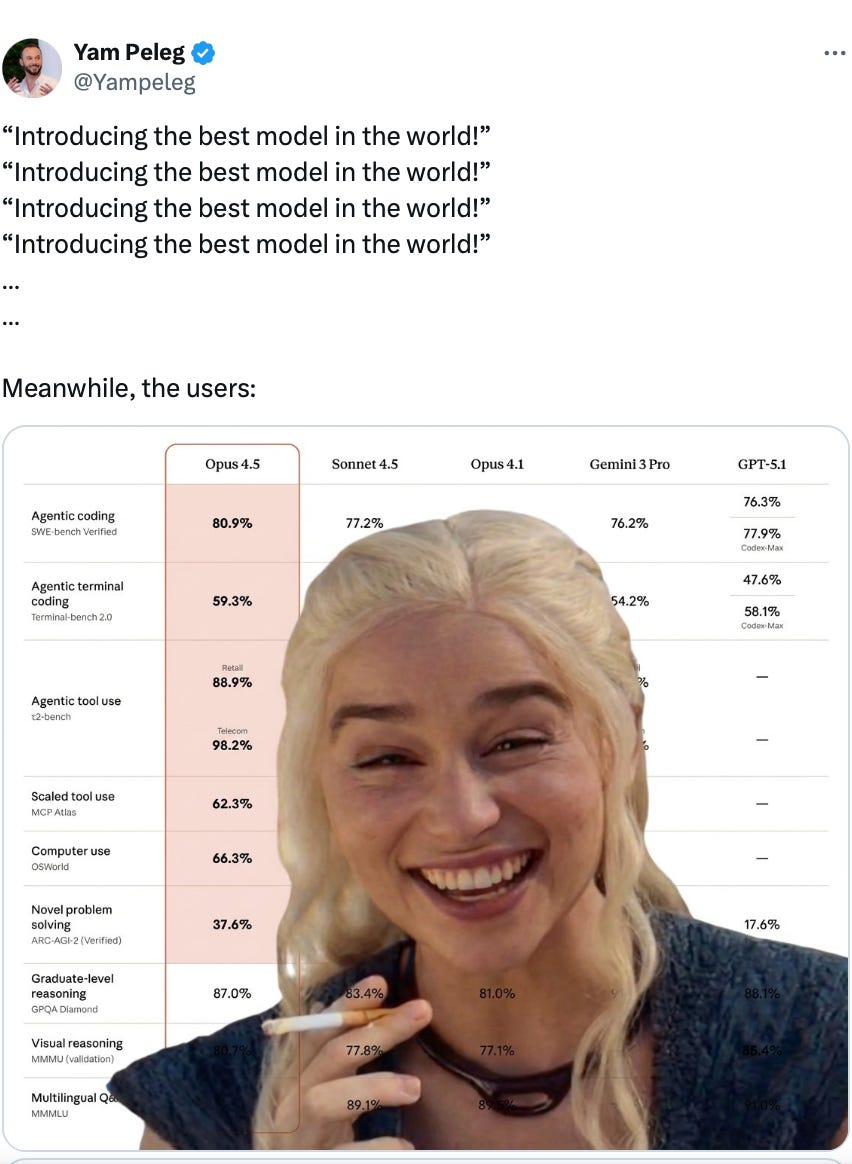

Claude Opus 4.5 is Here

Claude isn’t slowing down in the model race either (are we following AI F1?). About a week after Gemini 3 dropped, Anthropic rolled out Claude Opus 4.5 on November 24.

Right now, the company calls it their most powerful Claude model for agentic workflows and coding. It’s mainly aimed at pro users, developers, and teams that need top-tier logic, precision, and more autonomy.

The main feature is that Opus 4.5 can control desktop apps through APIs and tools, interact with websites in the browser, and basically operate as a digital assistant inside your existing software setup.

What changed:

The token price dropped from $15 / $75 to $5 / $25 per million input / output tokens (three times cheaper than the previous Opus).

At medium effort, it matches Sonnet 4.5’s best SWE-bench Verified score while using 76% fewer output tokens.

Added manual control of “thinking time”: low, medium, high.

Claude App now has context compression: old messages get summarized and moved into a fresh window without losing the thread.
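To put the price cut in concrete terms, here is a minimal sketch that compares the cost of one workload at the old and new rates. It assumes the quoted figures are US dollars per million input and output tokens; the workload size and the helper name `cost_usd` are made up for illustration.

```python
# Prices from the list above, assumed to be USD per one million tokens
# as (input, output). The workload below is a made-up example.
OLD_OPUS = (15.0, 75.0)
OPUS_4_5 = (5.0, 25.0)


def cost_usd(prices, input_tokens, output_tokens):
    """Return the cost in USD for the given token counts."""
    input_price, output_price = prices
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical workload: 2M input tokens and 500k output tokens.
old = cost_usd(OLD_OPUS, 2_000_000, 500_000)
new = cost_usd(OPUS_4_5, 2_000_000, 500_000)

print(f"old: ${old:.2f}, new: ${new:.2f}, ratio: {old / new:.1f}x")
# prints "old: $67.50, new: $22.50, ratio: 3.0x"
```

Because both rates fell by the same factor, the ratio stays at 3x whatever the mix of input and output tokens.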

Btw, Claude Code now works in the desktop app, and you can run tasks in parallel. Maybe it’s time to try the tool that’s been stealing the hearts of devs and our subscribers.

On Anthropic’s internal exam (the one they give job candidates), Opus 4.5 scored higher than any human candidate ever has, within the same 2-hour time limit. It also topped the SWE-bench Multilingual leaderboard, leading in 7 out of 8 programming languages, which puts it ahead of Sonnet 4.5 and most competitors on multilingual production code.

News of the week 🌍

Interactive Images in Gemini app

Google presented a feature that basically turns static study diagrams into clickable, live objects within the Gemini app. Students (the main target audience) can click on any part of a diagram (biological systems, for example) and get instant explanations.

It’s powered by Gemini’s visual AI, which auto-tags everything and gives context without any manual work. You can even ask follow-up questions right there and keep going deeper, all without bouncing around different screens.

Amid all the photo and video generation launches, it’s a pretty cool move to make studying dynamic and, most importantly, interactive, rather than just handing out ready-made answers.

Voice Mode Directly Within ChatGPT

No digest without OpenAI. The company launched an update integrating Voice Mode straight into the main chat interface on mobile (iOS/Android) and web, which means no separate screen or floating orb anymore. Now voice input/output blends with text.

When you speak, you can see real-time transcripts, scroll through message history, images, maps, charts, or code without breaking the flow.

How to activate: Update the app, start a chat, tap the microphone icon to the right of the input field. Hit “End” to finish.

For the old separate mode: Settings > Voice Mode > Separate mode. Available globally for all users.

FLUX is Back

Black Forest Labs released FLUX 2, a rebuilt version of its image model. The model is available on Hugging Face in two versions. FLUX.2 Pro is made for film, advertising, and fine art and focuses on realistic lighting, physics, and anatomy. FLUX.2 Flex is optimized for graphic design work with sharp colors, clean layouts, and text rendering.

They say the generation quality is on par with Nano Banana Pro.

FLUX 2 was trained from the ground up with 32B parameters, uses Mistral Small 3.1 for better context understanding, outputs up to 4MP resolution, and needs 90GB of VRAM (or 64GB compressed). Overall, it is built for servers and cloud setups, but you can try it in ComfyUI.

P.S. Black Forest Labs was founded in 2024 by Robin Rombach, Andreas Blattmann, and Patrick Esser – the same researchers who built Stable Diffusion. According to Artificial Analysis, FLUX.1 models already surpass Midjourney and OpenAI’s image generators in quality. Now with FLUX 2, they’re pushing the bar even higher.

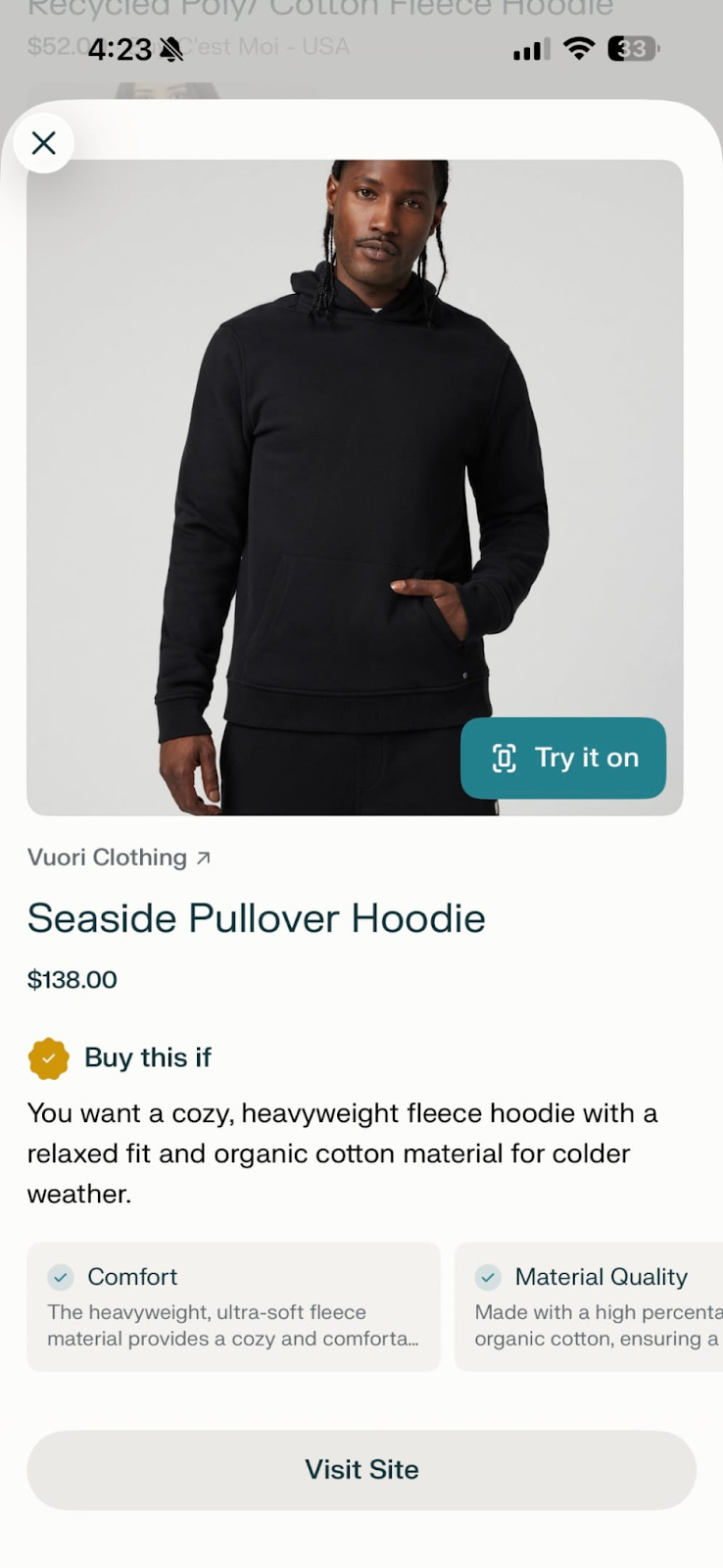

Virtual Try-On in Perplexity

Perplexity rolled out a Virtual Try-On feature for all Pro/Max subscribers. You can upload a full-body photo, and AI builds a realistic digital avatar in under a minute (powered by Google’s Nano Banana Pro). When you search for clothes, a “Try it on” button appears and lets you see how the item fits your avatar in real time.

There are even bonus features: Instant Buy with PayPal, comparisons, reviews, free shipping. It remembers your preferences from past chats for personalization.

With this, Perplexity is leveling up its shopping game. First came Snap to Shop (from photo to product matches); now it tackles one of e-commerce’s biggest pain points: returns (which hit around 30% industry-wide).

Warner Music Flips on AI Music

It seems that someone big is ready to cooperate with AI. Warner Music Group settled its copyright lawsuit with Suno on Tuesday and struck a licensing deal (a major shift from last year’s “sue first” approach). The partnership will launch licensed AI music models in 2026, with WMG artists getting full control over how their names, voices, and music are used in AI-generated content.

This comes a week after WMG settled with another AI music startup, Udio. Btw, last year, WMG, Universal, and Sony all sued Suno and Udio for copyright infringement.

Useful tools ⚒️

nao - AI data IDE for 10x faster data work

Questas - Build interactive stories with AI images and videos

Klariqo AI Voice Assistants - AI Voice assistant in 3 minutes. Built for non-developers

Raydian - The next frontier of AI product builders

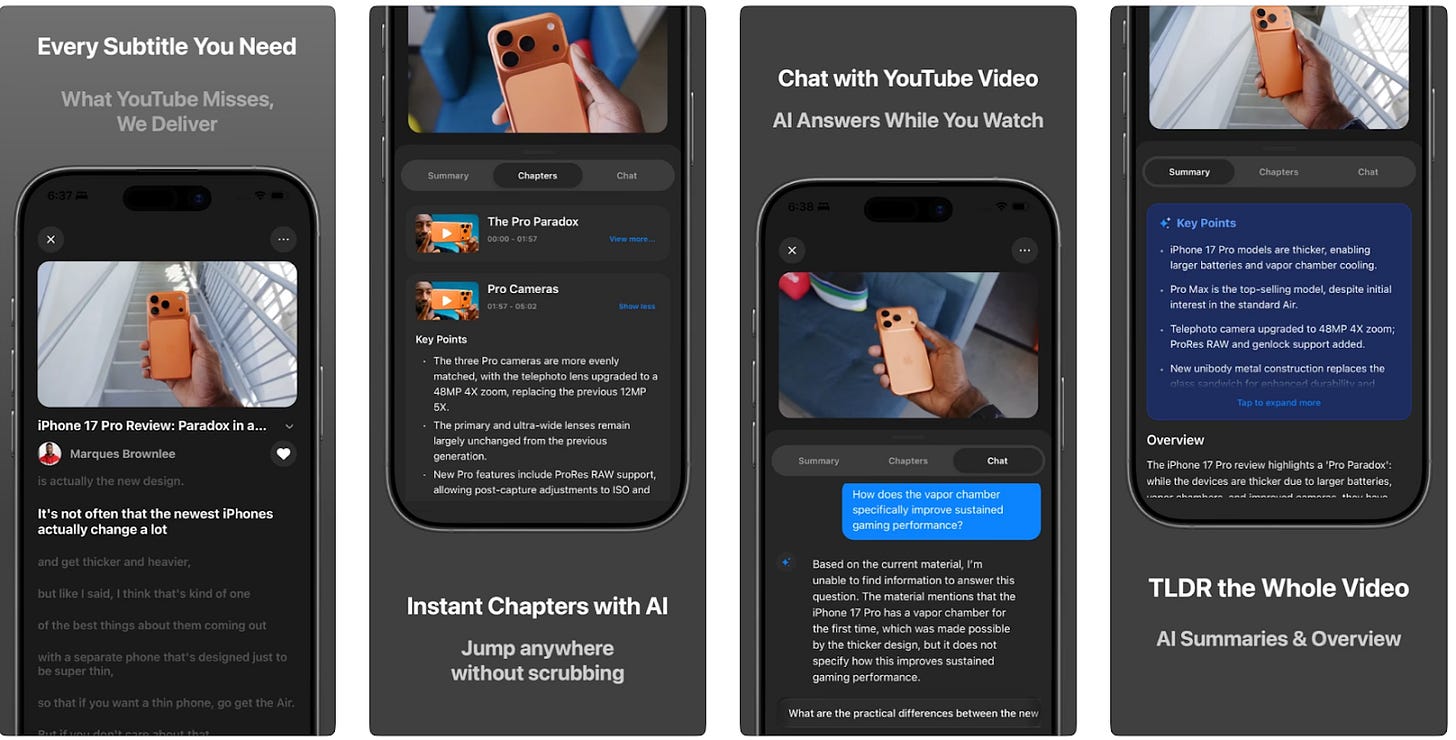

InsightTube - AI insights from YouTube — fast, clear, effortless.

InsightTube auto-summarizes videos, chops them into chapters, translates subtitles on the fly, and lets you chat with the content instead of rewatching. Two devs built it because they were tired of long videos eating their time.

Weekly Guides 📕

Why Notebook LM Is The Best AI Research Tool Right Now

Google Antigravity Tutorial: Build a Finance Risk Dashboard

Nano Banana PRO tips! and 50 creative Prompts You have to Try

Google AI Studio Tutorial...Beginner to Expert in 10 Prompts

AI Meme of the Week 🤡

AI Tweet of the Week 🐦

Bonus Materials 🎁

The Thinking Game | Full documentary | Tribeca Film Festival official selection - To learn about the journey of Google DeepMind CEO Demis Hassabis on his path to the Nobel Prize.

Hybrid Quantum Transformer for Language Generation - To read about the first quantum LLM, built by Chinese researchers.

The 3D model - To play with a 3D model that shows how an LLM works

Why the AI Bubble Debate is Useless - To listen

The Future Belongs to Product Builders - To learn AI Coding with always up-to-date video lessons to shape the professional future you want.

See you next week! Enjoy the long weekend, and don’t forget to upgrade your subscription during BFCM.
