ComfyUI: Free Node-based AI Workflow Tool

Practical Examples and Setup for Advanced Image & Video Generation

Hello there!

AI-generated pictures and videos are everywhere, and it literally takes me a couple of minutes to whip something up for my projects.

But why create random images when you can build efficient workflows? Professionals don’t mess around: they build systems that make their processes faster and more consistent. In this guide, you’ll learn to create professional-grade AI content (yes, like the examples below), and ComfyUI is the main character here.

In this piece, we:

Show what cool things you can create with ComfyUI

Explain what ComfyUI and node-based tools are

Explore how you can fit it into your existing workflow

Walk you through setting up ComfyUI

Let’s go!

If you’ve read our FloraAI post, you’re probably already familiar with node-based tools. ComfyUI is in the same league, but it’s free and open source! That means no subscriptions or credit caps standing between you and your ideas. Look at what it can do!

Why you should try node-based ComfyUI

You know that annoying thing where you misspell one word in your prompt and the whole image comes out wrong? Then you’re stuck rewriting it again and again, getting random results with zero control over what’s actually happening.

Creators solve this with ComfyUI. It’s for when you need something super specific and you’re tired of guesswork: all your images, prompts, and settings are laid out in front of you.

Maybe you’re already tired of this phrase (😅), but you really don’t need coding skills to start. The key feature is workflow building.

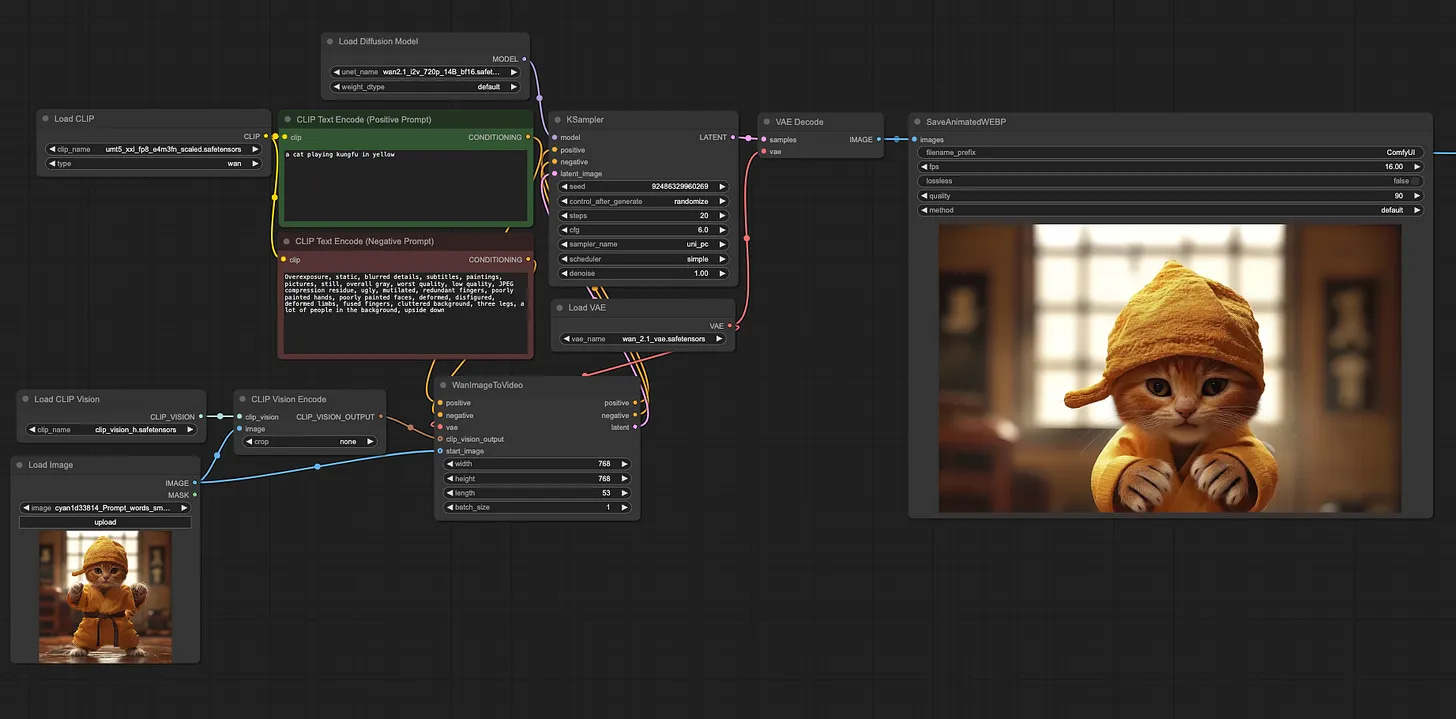

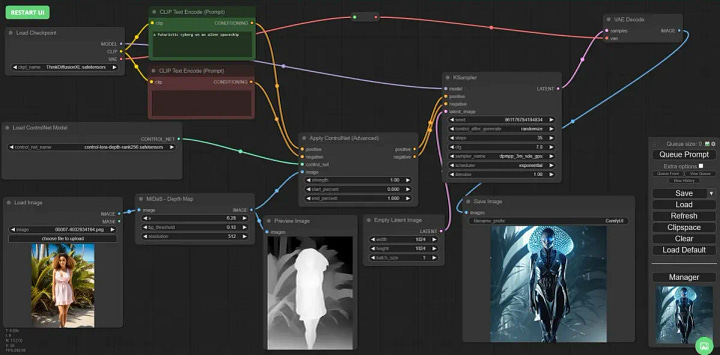

How does it work? Instead of a chat interface, buttons, and sliders, it uses a visual node editor (as you can see in the picture). You build workflows by dragging nodes onto the canvas and connecting them: from the text prompt to the settings, and from the settings to the final picture.
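To make the “connect the nodes” idea concrete, here is a minimal sketch of a basic text-to-image graph written as plain Python data. It is loosely modeled on ComfyUI’s API-format workflow JSON, where each node ID maps to a `class_type` and its `inputs`, and a connection is written as `[source_node_id, output_index]`. The node types follow ComfyUI’s default text-to-image template as we understand it, but treat the exact input names and the checkpoint file name as assumptions. The helper `execution_order` is hypothetical: it runs a plain topological sort to show that a node can only run once every node feeding into it has finished. ComfyUI’s real executor does more than this (for example, it caches results and skips nodes whose inputs haven’t changed).

```python
from collections import deque

# A minimal text-to-image graph, loosely modeled on ComfyUI's API-format JSON.
# Each node has a class_type and inputs; a link is [source_node_id, output_index].
WORKFLOW = {
    "4": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "model.safetensors"}},  # hypothetical file name
    "6": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "a red sneaker on a white background", "clip": ["4", 1]}},
    "7": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "blurry, low quality", "clip": ["4", 1]}},
    "5": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "3": {"class_type": "KSampler",
          "inputs": {"seed": 42, "steps": 20, "cfg": 7.0,
                     "sampler_name": "euler", "scheduler": "normal", "denoise": 1.0,
                     "model": ["4", 0], "positive": ["6", 0],
                     "negative": ["7", 0], "latent_image": ["5", 0]}},
    "8": {"class_type": "VAEDecode",
          "inputs": {"samples": ["3", 0], "vae": ["4", 2]}},
    "9": {"class_type": "SaveImage",
          "inputs": {"filename_prefix": "demo", "images": ["8", 0]}},
}


def is_link(value):
    """A link is a two-item list: [source_node_id, output_index]."""
    return (isinstance(value, list) and len(value) == 2
            and isinstance(value[0], str) and isinstance(value[1], int))


def upstream(node):
    """IDs of the nodes whose outputs feed into this node."""
    return {v[0] for v in node["inputs"].values() if is_link(v)}


def execution_order(workflow):
    """Topological sort (Kahn's algorithm): a node runs only after all its inputs."""
    deps = {nid: upstream(node) for nid, node in workflow.items()}
    dependents = {nid: [] for nid in workflow}
    for nid, ups in deps.items():
        for up in ups:
            dependents[up].append(nid)
    remaining = {nid: len(ups) for nid, ups in deps.items()}
    ready = deque(sorted(nid for nid, n in remaining.items() if n == 0))
    order = []
    while ready:
        nid = ready.popleft()
        order.append(nid)
        for child in sorted(dependents[nid]):
            remaining[child] -= 1
            if remaining[child] == 0:
                ready.append(child)
    if len(order) != len(workflow):
        raise ValueError("workflow contains a cycle")
    return order


for nid in execution_order(WORKFLOW):
    print(nid, WORKFLOW[nid]["class_type"])
```

Reading the printed order top to bottom mirrors what you see on the canvas: the model loader and the empty canvas come first, then the prompts, then the sampler, the decoder, and finally the saved image.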

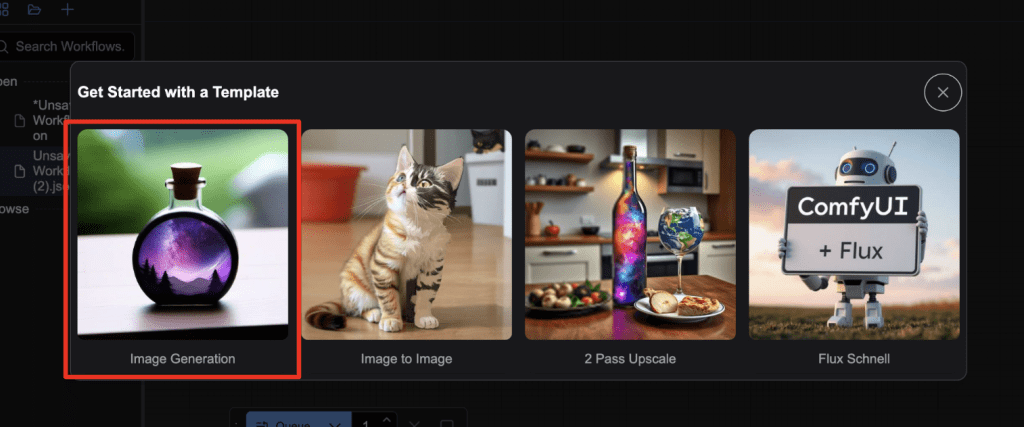

It’s not as scary as it looks, because you’re the one in charge of the process. You can build anything from simple text-to-image setups to complex multi-step pipelines for image and video generation, processing, and analysis. What’s more, there are already plenty of ready-to-use templates.

It’s open!

As I already mentioned, it’s completely free and accessible to everyone. It runs on your own PC using your GPU, so you can work with big AI models like Stable Diffusion, Wan, Flux, and more without waiting in cloud server queues. The limits online services impose, such as generation caps, speed throttling, and paywalls, don’t apply; your main constraint is your own hardware, especially your GPU’s VRAM.

ComfyUI puts you in the driver’s seat: you can mix control points, swap models mid-process, and handle video, images, and analysis all in one workflow.

So basically, ComfyUI stands out with:

Node-based workflow: every part of the generation process is a node, and you can combine nodes however you want. The nodes show you exactly what gets processed, when, and how, which helps you understand what’s happening under the hood.

You can experiment with prompts, tweak processes, and modify images and videos however you want, all in one place.

CUDA integration: on NVIDIA GPUs, ComfyUI uses CUDA to speed up the generation process.

There’s a big community with tons of ready-made workflows and custom nodes. Workflows work like code: they’re saved as JSON files that you can export, share, and even run programmatically.
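Here is what “run them programmatically” can look like. This is a minimal sketch using only the Python standard library, assuming a workflow exported from ComfyUI in its API format and a local ComfyUI server at the default address `http://127.0.0.1:8188`, which accepts queued jobs on its `/prompt` endpoint as a JSON body with a `prompt` key. The helper names, the file name `workflow_api.json`, and the node ID `"6"` (a text-encode node holding the positive prompt) are hypothetical placeholders for your own setup.

```python
import json
import urllib.request

COMFY_URL = "http://127.0.0.1:8188"  # assumption: default local ComfyUI address


def set_prompt_text(workflow, node_id, text):
    """Return a copy of the workflow with new text on one text-encode node."""
    updated = json.loads(json.dumps(workflow))  # deep copy of plain JSON data
    updated[node_id]["inputs"]["text"] = text
    return updated


def build_request(workflow, server=COMFY_URL):
    """Build (but do not send) a POST request for the /prompt endpoint."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"{server}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(workflow, server=COMFY_URL):
    """Send the workflow to a running ComfyUI server and return its JSON reply."""
    with urllib.request.urlopen(build_request(workflow, server)) as resp:
        return json.loads(resp.read())


def run_from_file(path, text, node_id="6", server=COMFY_URL):
    """Load an API-format export, swap in a new prompt, and queue it."""
    with open(path, encoding="utf-8") as f:
        workflow = json.load(f)
    return queue_workflow(set_prompt_text(workflow, node_id, text), server)
```

With ComfyUI running, a call such as `run_from_file("workflow_api.json", "a ceramic mug on a wooden table")` should queue a new generation, and the server’s reply should include an ID for the queued job. Keeping `build_request` separate from the network call makes it easy to inspect exactly what will be sent before anything hits the server.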

Are there alternatives?

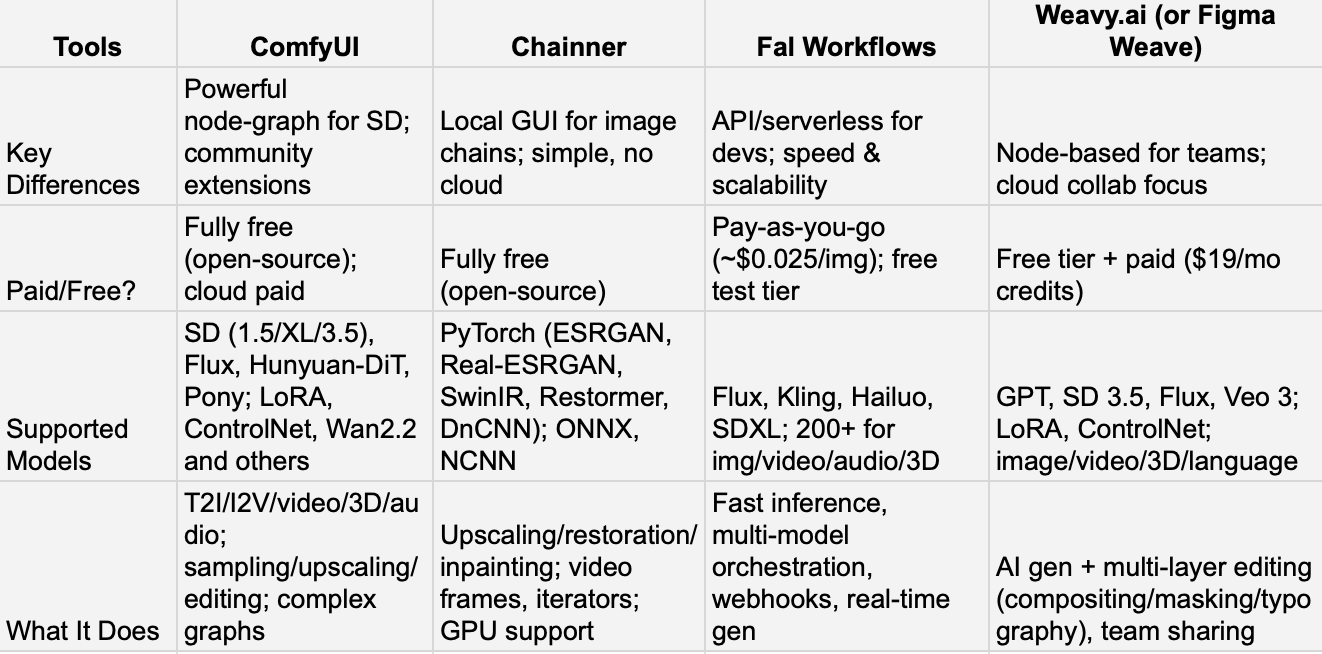

Yes. ComfyUI’s competitors either borrow bits of its node-style logic or aim for more automated workflows. For example, Fal.ai runs in the cloud: you use its API and pay per call, which works well for teams or devs who don’t want to manage hardware. Weavy is built around shared workflows and gives you a visual node interface in the cloud, so your whole team can build together. Finally, chaiNNer is more niche: it focuses on upscaling and restoration.

Personally, I see ComfyUI as its own thing. You get tons of freedom and can run any SD-style model on your machine, but learning it feels like taking a content creation course.

Pick the one that fits how you work. ComfyUI takes time to learn (definitely more than 30 minutes) because everything is manual, but once you get it, it feels unlimited.

Is ComfyUI a great fit for you?

Of course, as I said, anyone can use ComfyUI, but not everyone needs it. It works best for:

AI artists and marketing teams who want to experiment with models, build complex generation chains, manually mix nodes and parameters, and create custom pipelines. If you’re after simplicity and minimal learning time, don’t even start: it may drain you.

Business researchers and developers. Since ComfyUI supports extensions and custom nodes, you can add 3D environments, mask tools, multi-layer editors, and API integrations. This lets you handle big projects and visualize exactly what you need. What’s more, it keeps your projects private, because ComfyUI can run completely locally.

Collaborative teams. They can save, share, and reuse workflows and templates, which helps them organize work, train members, and reproduce processes to a consistent standard.

What we can use it for

Let’s explore more of what ComfyUI can do. Below, we’ll cover its main elements and how beginners can set them up to get started comfortably.

ComfyUI for Marketing and Sales

Everything in ComfyUI screams “use me for marketing”. You can swap backgrounds, set up lighting, fix colors, clean up details, add shadows, and nail a brand’s look without fighting random prompts. It works great for product shots, ads, banners, and quick tests before a campaign. So, dear marketers and online sellers, it’s worth considering.

ComfyUI for all types of designers

You can crank out dozens of character variations, outfits, architectural stuff, art styles, and so on, just by changing your prompt. And all this is thanks to LoRA (in fact, the marketing examples above were made with LoRA as well).

LoRA (Low-Rank Adaptation) is a small add-on that teaches (in nerd terms, fine-tunes) your AI model the specific styles, details, and tweaks you need. Without LoRA, it’s hard to get precise details, like a specific pattern on clothing or exact character features.
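In a ComfyUI graph, a LoRA is usually applied with a `LoraLoader` node placed between the checkpoint loader and the nodes that use the model and the CLIP text encoder. Below is a minimal sketch that does this rewiring on an API-format workflow stored as a Python dict. The input names (`lora_name`, `strength_model`, `strength_clip`) match the built-in node as far as we know, but check them against your ComfyUI version; the helper `add_lora`, the node IDs, and the file `my_style.safetensors` are hypothetical.

```python
import copy


def add_lora(workflow, ckpt_id, lora_id, lora_name, strength=0.8):
    """Insert a LoraLoader after the checkpoint and reroute its MODEL/CLIP users.

    ckpt_id: ID of the CheckpointLoaderSimple node (outputs 0=MODEL, 1=CLIP, 2=VAE).
    lora_id: new, unused node ID for the LoraLoader (outputs 0=MODEL, 1=CLIP).
    """
    if lora_id in workflow:
        raise ValueError(f"node id {lora_id!r} already in use")
    wf = copy.deepcopy(workflow)
    # Reroute every input that read MODEL or CLIP straight from the checkpoint.
    for node in wf.values():
        for key, value in node["inputs"].items():
            if value == [ckpt_id, 0]:
                node["inputs"][key] = [lora_id, 0]
            elif value == [ckpt_id, 1]:
                node["inputs"][key] = [lora_id, 1]
    # The LoRA node itself reads MODEL and CLIP from the checkpoint;
    # the VAE link (output 2) is left untouched.
    wf[lora_id] = {
        "class_type": "LoraLoader",
        "inputs": {
            "model": [ckpt_id, 0],
            "clip": [ckpt_id, 1],
            "lora_name": lora_name,
            "strength_model": strength,
            "strength_clip": strength,
        },
    }
    return wf


# Trimmed example graph (KSampler inputs shortened for readability).
workflow = {
    "4": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "base.safetensors"}},
    "6": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "knight in ornate armor", "clip": ["4", 1]}},
    "3": {"class_type": "KSampler",
          "inputs": {"model": ["4", 0], "positive": ["6", 0]}},
    "8": {"class_type": "VAEDecode",
          "inputs": {"samples": ["3", 0], "vae": ["4", 2]}},
}
styled = add_lora(workflow, ckpt_id="4", lora_id="10",
                  lora_name="my_style.safetensors")
print(styled["3"]["inputs"]["model"])  # ['10', 0]
```

The strength value controls how strongly the LoRA’s style is applied; lowering it is a common first move when a style overpowers the prompt.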

Ultimately, it can speed up anyone working with visuals:

Game devs can create quick NPC variations and early concept drafts

Fashion designers can test silhouettes and outfits with quick try-ons

Animation artists can try different character looks and props

Product designers should take notes too: you can preview shapes, colors, and materials in a few steps
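One way to get those quick variations is to keep the workflow fixed and only loop over prompt details and seeds. Here is a minimal sketch of that idea, assuming an API-format ComfyUI workflow stored as a Python dict where node `"6"` holds the positive prompt and node `"3"` is the KSampler. Both node IDs and the helper `make_variations` are hypothetical; each resulting workflow would still need to be queued in ComfyUI to actually render.

```python
import copy
import itertools


def make_variations(workflow, base_prompt, details, seeds,
                    prompt_node="6", sampler_node="3"):
    """Yield (label, workflow) pairs: one per combination of detail and seed."""
    for detail, seed in itertools.product(details, seeds):
        wf = copy.deepcopy(workflow)  # never mutate the original template
        wf[prompt_node]["inputs"]["text"] = f"{base_prompt}, {detail}"
        wf[sampler_node]["inputs"]["seed"] = seed
        yield f"{detail} / seed {seed}", wf


# Trimmed workflow: only the two nodes this sketch edits.
workflow = {
    "6": {"class_type": "CLIPTextEncode", "inputs": {"text": "", "clip": ["4", 1]}},
    "3": {"class_type": "KSampler", "inputs": {"seed": 0, "steps": 20}},
}

outfits = ["leather jacket", "winter coat", "knight armor"]
variants = list(make_variations(workflow, "game NPC, full body, studio light",
                                outfits, seeds=[1, 2]))
for label, wf in variants:
    print(label, "->", wf["6"]["inputs"]["text"])
```

Reusing the same small set of seeds across every outfit tends to make the results easier to compare side by side, since the only thing that changes between two images with the same seed is the prompt detail.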

Wan model – for social media videos and movies

Wan is an open video generation model from Alibaba. It creates videos, animations, and images from text. With the right model variant (for example, these videos were made with Wan 2.1 VACE), you can keep tight control over movement, style, lighting, and objects.

Among other features:

Inpainting using a reference image (when you like an image but want to change only certain parts of it, inpainting is the best method)

Outpainting (extends the content beyond the edges of the original image)

Multi-step pipelines (combine several nodes for effects like denoising, upscaling, or style transfer in one workflow)
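Outpainting boils down to enlarging the canvas and telling the model which pixels it should invent. This is a minimal, dependency-free sketch of that preparation step: it computes the padded size and a mask where 1 marks new area to generate and 0 marks original pixels to keep. In ComfyUI this is normally handled by a padding node (such as `Pad Image for Outpainting`), so the function `outpaint_mask` is purely illustrative and hypothetical. Real pipelines usually also feather the mask edge, and for Stable Diffusion-style models they often keep final sizes at multiples of 8.

```python
def outpaint_mask(width, height, left=0, top=0, right=0, bottom=0):
    """Return (new_width, new_height, mask) for padding an image on each side.

    mask[y][x] == 1 marks new pixels the model should generate (the padding);
    mask[y][x] == 0 marks original pixels that should be kept.
    """
    if min(width, height) <= 0 or min(left, top, right, bottom) < 0:
        raise ValueError("sizes must be positive and padding non-negative")
    new_w, new_h = width + left + right, height + top + bottom
    mask = [
        [0 if (left <= x < left + width and top <= y < top + height) else 1
         for x in range(new_w)]
        for y in range(new_h)
    ]
    return new_w, new_h, mask


# Extend a tiny 4x3 image by 2 pixels on the right only (e.g. to widen a banner).
w, h, mask = outpaint_mask(4, 3, right=2)
for row in mask:
    print("".join(str(v) for v in row))
print(w, h)
```

Inpainting uses the same kind of mask, just drawn inside the original canvas instead of around it.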

How to start with ComfyUI

First, we have to download ComfyUI here. Below, we’ll grab all the internal components it needs.
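Once the components are downloaded, they need to land in the right folders. A default ComfyUI folder keeps model files in subfolders of `models/`, for example `models/checkpoints` for base checkpoints, `models/loras` for LoRAs, and `models/vae` for VAEs. Here is a minimal sketch, assuming that default layout, that lists which of these component types still have no model files. The folder list is not exhaustive, and the helper `missing_components` and the set of file extensions are our own assumptions.

```python
from pathlib import Path

# Assumed default subfolders of the ComfyUI directory (not an exhaustive list).
MODEL_DIRS = {
    "checkpoints": "models/checkpoints",
    "loras": "models/loras",
    "vae": "models/vae",
}
# Common model file extensions; adjust to whatever you actually download.
MODEL_SUFFIXES = {".safetensors", ".ckpt", ".pt", ".pth"}


def missing_components(comfy_root):
    """Return the component types whose folder is absent or holds no model files."""
    root = Path(comfy_root)
    missing = []
    for kind, rel in MODEL_DIRS.items():
        folder = root / rel
        has_models = folder.is_dir() and any(
            p.is_file() and p.suffix.lower() in MODEL_SUFFIXES
            for p in folder.rglob("*")
        )
        if not has_models:
            missing.append(kind)
    return missing
```

Running something like `print(missing_components("ComfyUI"))` from the folder you downloaded into gives a quick checklist of what still needs to be fetched.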