Gemini 3 Flash, GPT Image 1.5 and more | Weekly Digest

PLUS HOT AI Tools & Tutorials

Hey! Welcome to the latest Creators’ AI Edition.

This week, Google quietly pushed Gemini 3 Flash into everything from Search to developer APIs, OpenAI rolled out GPT Image 1.5, and Cursor blurred the line between IDE and design tool.

But let’s take everything in order.

Featured Materials 🎟️

News of the week 🌍

Useful tools ⚒️

Weekly Guides 📕

AI Meme of the Week 🤡

AI Tweet of the Week 🐦

Bonus Materials 🎁

From our partners:

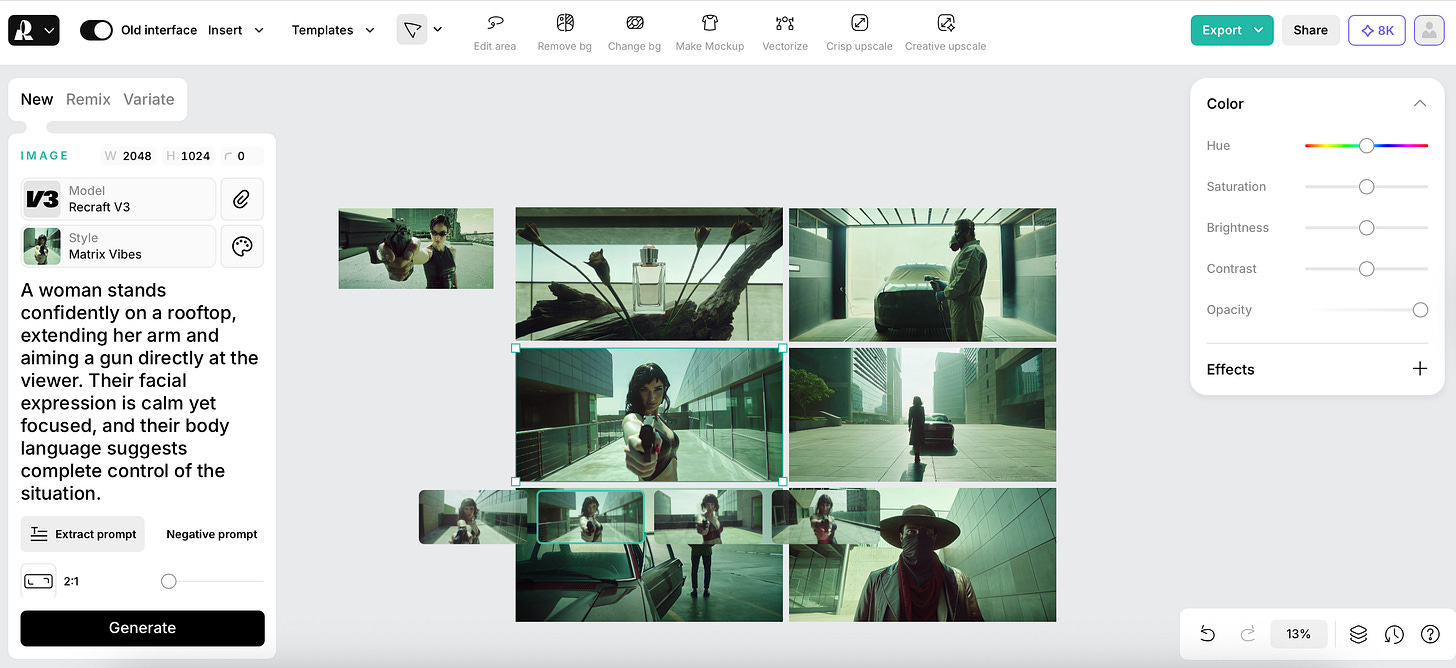

Recraft

Recraft is one of the best image generation models out there that comes with its own design tool, trusted by brands like Ogilvy, HubSpot, Netflix, Asana, Airbus.

There, you can create marketing‑ready visuals with precise color control, vector-ready assets, and custom brand styles.

The platform removes friction from consistent tasks so anyone can generate visual content from text fast without any design experience.

Bring your ideas to life instantly!

Featured Materials 🎟️

Gemini 3 Flash is here

Did you notice all those posts with ⚡ emojis from Google accounts on Twitter? Yeah, that was their quirky way of announcing the new model.

Google rolled out Gemini 3 Flash, and despite the “Flash” label, this is not considered a lightweight model in the usual sense.

The model understands photos, video, and voice.

It uses the same architecture as the Pro version, so when it reasons through a prompt it picks up the subtle nuances and gives detailed answers, only faster.

Designed for agentic workflows, multimodal understanding, and everyday reasoning tasks.

Max input context: 1,048,576 tokens.

It replaces Gemini 2.5 Flash as the default fast model inside Google products and comes in two modes: Fast and Thinking.

Gemini 3 Flash is being deployed globally across:

Gemini app

AI Mode in Google Search

Gemini API (Google AI Studio, Gemini CLI)

Vertex AI & Gemini Enterprise

New agentic dev platform Google Antigravity

Btw, the model beats Gemini 2.5 Pro across many benchmarks, while running faster and cheaper.

Notable benchmark scores:

GPQA Diamond: 90.4% (PhD-level reasoning)

Humanity’s Last Exam: 33.7% (no tools)

MMMU Pro: 81.2% (multimodal reasoning, nearly at Gemini 3 Pro level)

Also, it uses 30% fewer tokens on average than Gemini 2.5 Pro on real traffic.

Real-world observations from users:

Writes simple programs nearly on par with Gemini 3 Pro.

In some cases performs better than Pro on practical coding tasks.

Can fully analyze long videos (up to ~50 minutes):

Understands on-screen actions, not just audio

Tracks characters’ movements

Reconstructs walking routes using visual cues and maps

What’s more, Gemini 3 Flash costs about a quarter of what Gemini 3 Pro does in API pricing.

News of the week 🌍

GPT Image 1.5

New image generator in ChatGPT. GPT Image 1.5 works 4x faster, preserves details better, and follows prompts more accurately, according to the devs.

Renders text better. Less of that characteristic yellow tint, but they didn’t manage to get rid of it completely.

For all the details (and more), check out our new post. We even compare GPT Image 1.5 with Nano Banana Pro.

Side note: Have you seen?

Journalists are making provocative predictions that OpenAI might not survive 2026. The startup has no clear product strategy, costs per request are massive, and belief that current language models will lead to AGI is fading. The bubble could burst, and the company will either go bankrupt or get acquired by Microsoft.

What do you think about this?

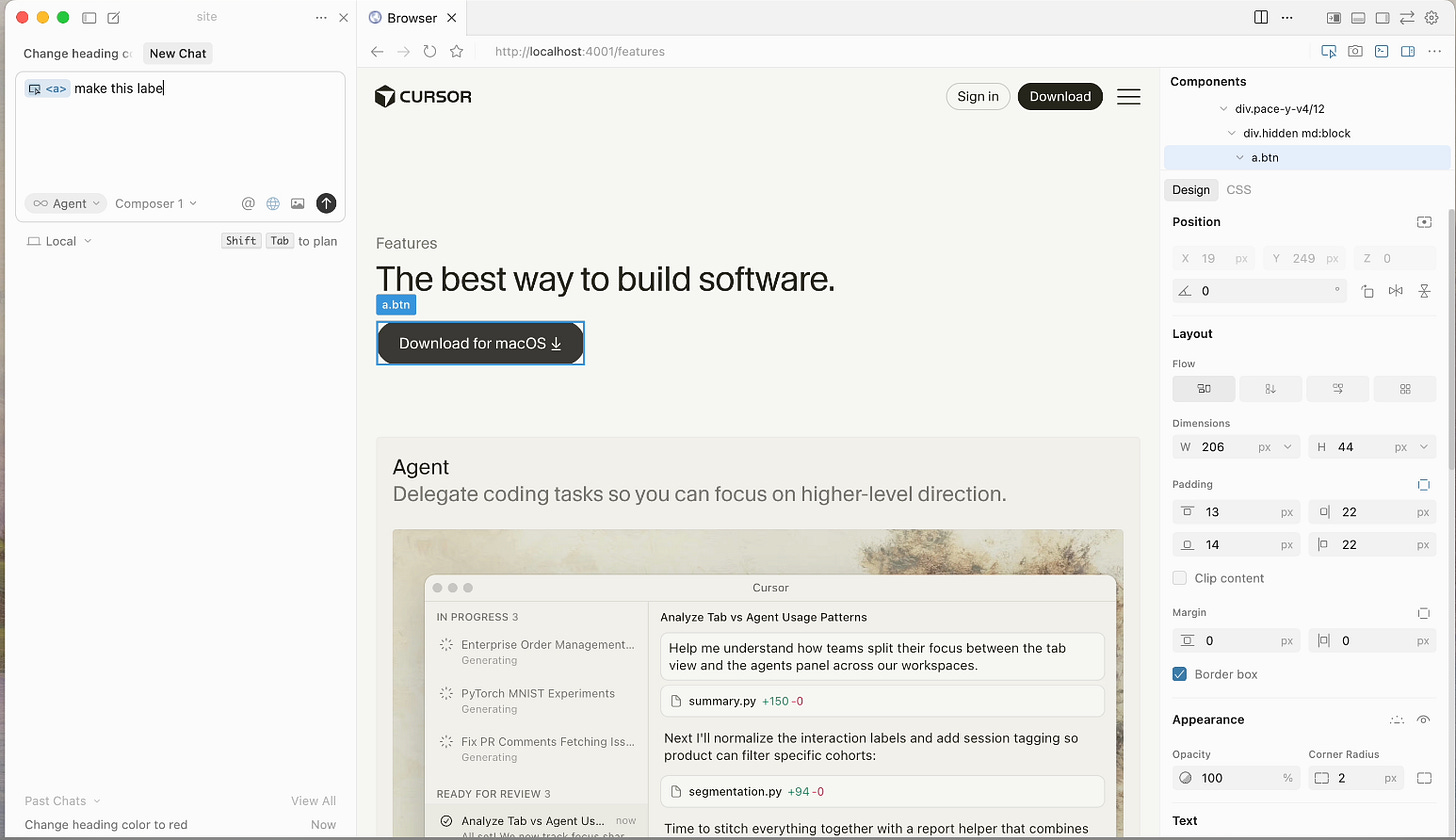

Cursor Now Has a Visual Editor

Visual Editor is a new killer feature in Cursor, the AI IDE.

Interface on the right: a classic design panel (like Figma) with sliders for fonts, colors, padding, border radius, letter spacing, grid, and menu positioning.

AI assistant on the left: you can click any element, describe what you want, like “make the button red”, and Cursor AI changes it in real time.

All changes show up immediately in Cursor’s embedded browser. Works with real site data, constraints, and interactions.

We’ve talked about Cursor’s capabilities multiple times, so I like that they’re making it more versatile. It’s definitely no longer just a tool to lift the manual coding burden off programmers.

A Powerful Duo

Google integrated Opal (a vibe-coding tool for building no-code mini-apps) directly into the web version of Gemini.

How to launch Opal in Gemini:

Open gemini.google.com → Gems side panel (left sidebar).

Choose Opal from ready-made Gems or click “Create new Gem” → Opal.

Describe your app: “Create a budget tracker: take income/expenses → show graph + saving tips”.

What the new Visual Step Editor brings:

Step-by-step view: your request breaks into blocks (prompt → model → tool → output). You can drag, edit, and rearrange.

There are also a bunch of templates and ready workflows (budget tracker, content generator, meeting notes).

Advanced Editor for complex flows.

3D‑model TRELLIS

Microsoft dropped a new neural network that generates 3D models from images.

Nails details and textures from the reference.

Size is around 4 billion parameters. Compact enough to run locally on GPUs with 24 GB VRAM.

It generates 3D assets up to 1536³ voxels with full PBR texturing.

Key tech:

O-Voxel / Omni-Voxel: their own sparse 3D format that encodes both geometry and appearance (color, materials) at the same time. It handles arbitrary topology like open surfaces, complex shapes, and sharp edges.

The solution is built on a high-performance pipeline that converts data from 2D to 3D using a structured latent space and 3D VAE. This keeps the representation compact and the accuracy high.

This 3D VAE with 16x downsampling compresses a 1024³ scene down to about 9.6K latent tokens with almost no quality loss. That compression helps explain how a model of around 4B parameters can run on a 24 GB GPU.
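
As a rough sanity check on those figures, here is a back-of-the-envelope sketch in Python. The resolution, downsampling factor, token count, and parameter count come from the text above. The 16-bit weight precision and the reading of "latent tokens" as occupied cells in a sparse latent grid are assumptions, not confirmed details of the model.

```python
# Figures quoted in the text.
RESOLUTION = 1024             # voxels per side of the scene
DOWNSAMPLE = 16               # VAE downsampling factor per axis
QUOTED_TOKENS = 9_600         # "about 9.6K latent tokens"
PARAMS = 4_000_000_000        # "around 4 billion parameters"

# Assumption (not from the text): weights stored in 16-bit precision.
BYTES_PER_PARAM = 2

# Side length of the latent grid after downsampling each axis.
latent_side = RESOLUTION // DOWNSAMPLE        # 64

# Number of cells if the latent grid were stored densely.
dense_cells = latent_side ** 3                # 262144

# Share of the dense grid that the quoted token count would cover.
occupancy = QUOTED_TOKENS / dense_cells       # about 0.037

# Memory needed for the weights alone, in gigabytes (10^9 bytes).
weights_gb = PARAMS * BYTES_PER_PARAM / 1e9   # 8.0

print(f"latent grid: {latent_side}^3 = {dense_cells:,} cells")
print(f"quoted tokens cover {occupancy:.1%} of the dense grid")
print(f"weights at 16-bit: {weights_gb:.1f} GB")
```

Under those assumptions, the weights take roughly a third of a 24 GB card, and the quoted token count is a small fraction of the dense latent grid, which is the kind of saving a sparse format is meant to deliver. Activations and intermediate buffers are not counted here.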

What it outputs:

Full PBR mesh: albedo, roughness, metallic, opacity/alpha (ready for rendering right away).

Supported formats: GLB, OBJ, FBX, STL, plus radiance fields and 3D Gaussians for neural rendering/real-time splatting.

Grok Voice Agent API

xAI launched the Grok Voice Agent API, built on the same stack powering Grok Voice in millions of Tesla vehicles and mobile apps.

It ranks #1 on Big Bench Audio for intelligence and delivers time-to-first-audio under 1 second, nearly 5x faster than competitors. The API uses a voice-to-voice architecture that processes speech directly with paralinguistic features like laughter and sighs, and achieves <700ms end-to-end latency.

The API supports 100+ languages with native-level proficiency and automatic language switching mid-conversation.

It also offers multiple voices (Ara, Eve, Leo) that excel at domain-specific terminology in healthcare, finance, and legal fields.

Pricing is flat at $0.05 per minute, undercutting alternatives like OpenAI Realtime API and ElevenLabs.
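
Flat per-minute pricing makes budgeting simple arithmetic. Below is a minimal sketch, assuming billing is based on total minutes with no per-call rounding, free tier, or volume discount (none of which is detailed here). The function name and the call volumes are made up for illustration.

```python
from decimal import Decimal

# Rate quoted in the text.
PRICE_PER_MINUTE = Decimal("0.05")


def monthly_voice_cost(minutes_per_call, calls_per_day, days=30):
    """Estimate spend in dollars for a voice agent billed per minute.

    Hypothetical helper: assumes every minute is billed at the flat rate.
    """
    total_minutes = Decimal(str(minutes_per_call)) * calls_per_day * days
    return (total_minutes * PRICE_PER_MINUTE).quantize(Decimal("0.01"))


# Example: 200 calls a day, 4 minutes each, over 30 days.
print(monthly_voice_cost(4, 200))  # 1200.00
```

`Decimal` is used instead of floats so that currency amounts do not pick up binary rounding errors.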

Developers get tool calling for web search, X search, and custom functions, plus emotional tone control.

Useful tools ⚒️

NexaSDK for Mobile - Easiest solution to deploy multimodal AI to mobile

xPrivo - Open Source, Free Anonymous AI Chat - Ready to Run Locally

Loki.Build - Design and ship studio-grade landing pages with AI

Voice Mate - AI-powered Voicemail

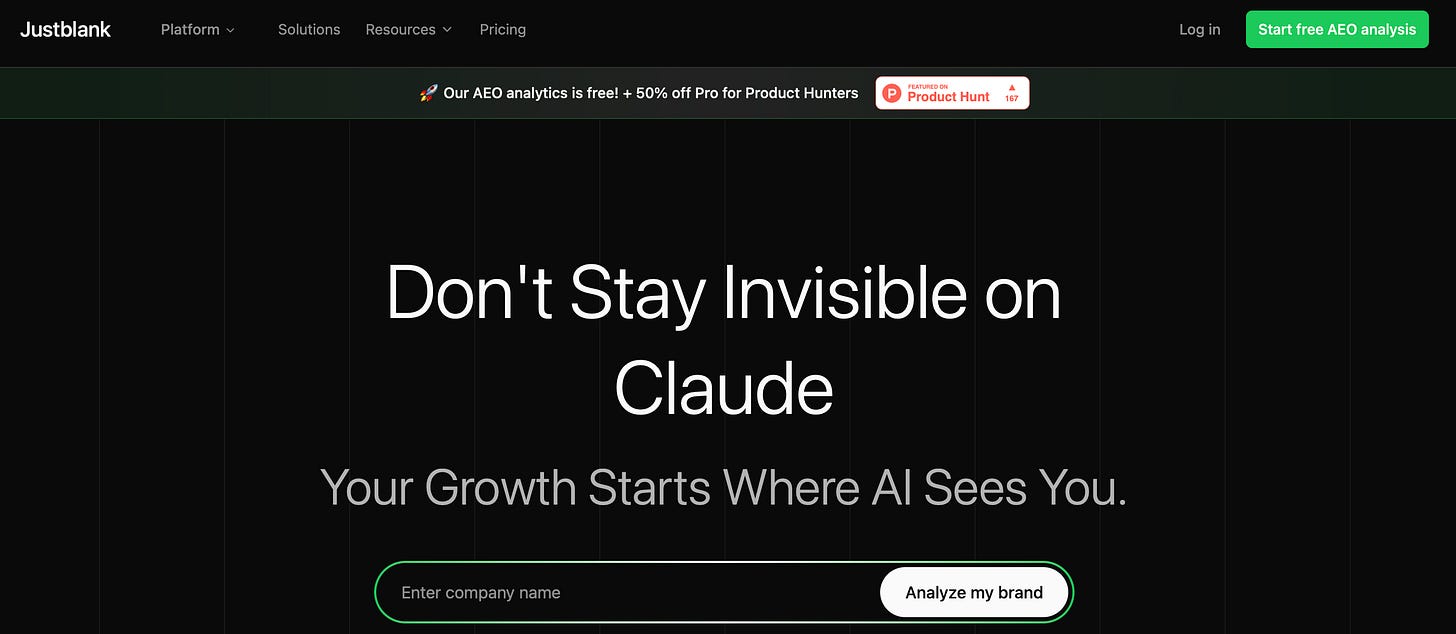

JustBlank - Free AEO analytics with content tools to boost AI rankings

JustBlank shows you how AI models like ChatGPT, Claude, and Gemini mention your brand in their answers and compares you to competitors so you know where you’re winning or losing. You see which prompts trigger your name, which competitors show up instead, and which topics get the most volume. Then you create AEO-optimized content directly in the tool to increase your chances of appearing in AI answers. It's free to start and built for marketers who don't want to disappear as AI becomes the new search.

Weekly Guides 📕

Build a Shopify Store In 5 Minutes With A.I. (2026 Beginner Guide)

316: The Practical Guide to AI in HubSpot

Google AI Studio Tutorial: Complete Guide to Chat, Build, and Stream Modes

The Realistic Guide to Mastering AI Agents in 2026

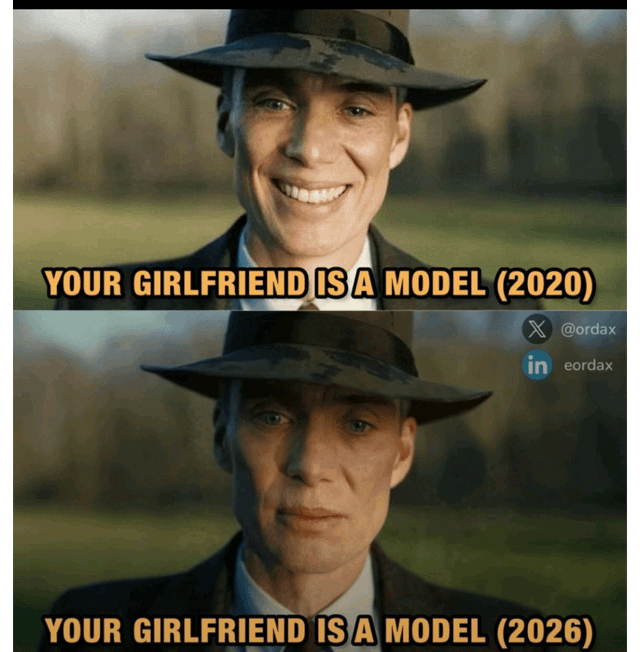

AI Meme of the Week 🤡

AI Tweet of the Week 🐦

Bonus Materials 🎁

Stanford AI Experts Predict What Will Happen in 2026 - to read what Stanford faculty expect from the year ahead in AI (stricter standards, deeper transparency, and a long-awaited shift toward practical, real-world impact).

The end of OpenAI, and other 2026 predictions - to listen

AI finds a hidden stress signal inside routine CT scans - to read

8 Million Users’ AI Conversations Sold for Profit by “Privacy” Extensions - to read about a massive AI privacy breach
