State of AI Report 2025: Insights and Predictions

Reasoning, Energy, Browsers & More

Hello folks!

2025 showed that AI has become much stronger (AI-first companies have crossed tens of billions in revenue), but its power has also revealed some cracks.

That’s what the State of AI Report 2025 is about: it shows us what artificial intelligence lives by today. It runs to over 300 pages of trends, challenges, and, of course, politics (where would we be without it?). If you don’t have time to read the whole thing, I’ve got you covered.

So we went through it and pulled out the most vital takeaways, the ones that can’t be missed. Perhaps something here will raise your eyebrows.

Ready? Let’s go!

Why it matters

Honestly speaking, keeping up with AI can be overwhelming. I suppose Nathan Benaich, the AI investor behind this report, felt the same back in 2018. That year he and his team at Air Street Capital started what became the most widely read open-access guide to the AI world. Keep in mind that they aren’t lab scientists but investors who needed a system to make sense of it all. Every time I flip through it, I see AI differently. If you’re an investor, founder, or researcher, you’ll feel the same.

Key figures

The report traditionally covers five pillars: Research, Industry, Politics, Safety, and Predictions. This year it introduced a remarkable addition: the largest open-access survey.

Welcome to the Cold War of Minds

As we’ve already seen in the YC review, we’ve entered the era of AI infrastructure. It’s no longer about flashy gimmicks. This new phase is about building the backbone of modern innovation, and the same theme runs through the State of AI Report 2025.

And here’s what I think we’re really looking at: a new kind of AI arms race (not of missiles, but of influence), with trillions poured into AI that shapes global decisions and nations that are thoughtfully (I mean very slowly) trying to keep these powerful systems under control.

The Age of Reasoning

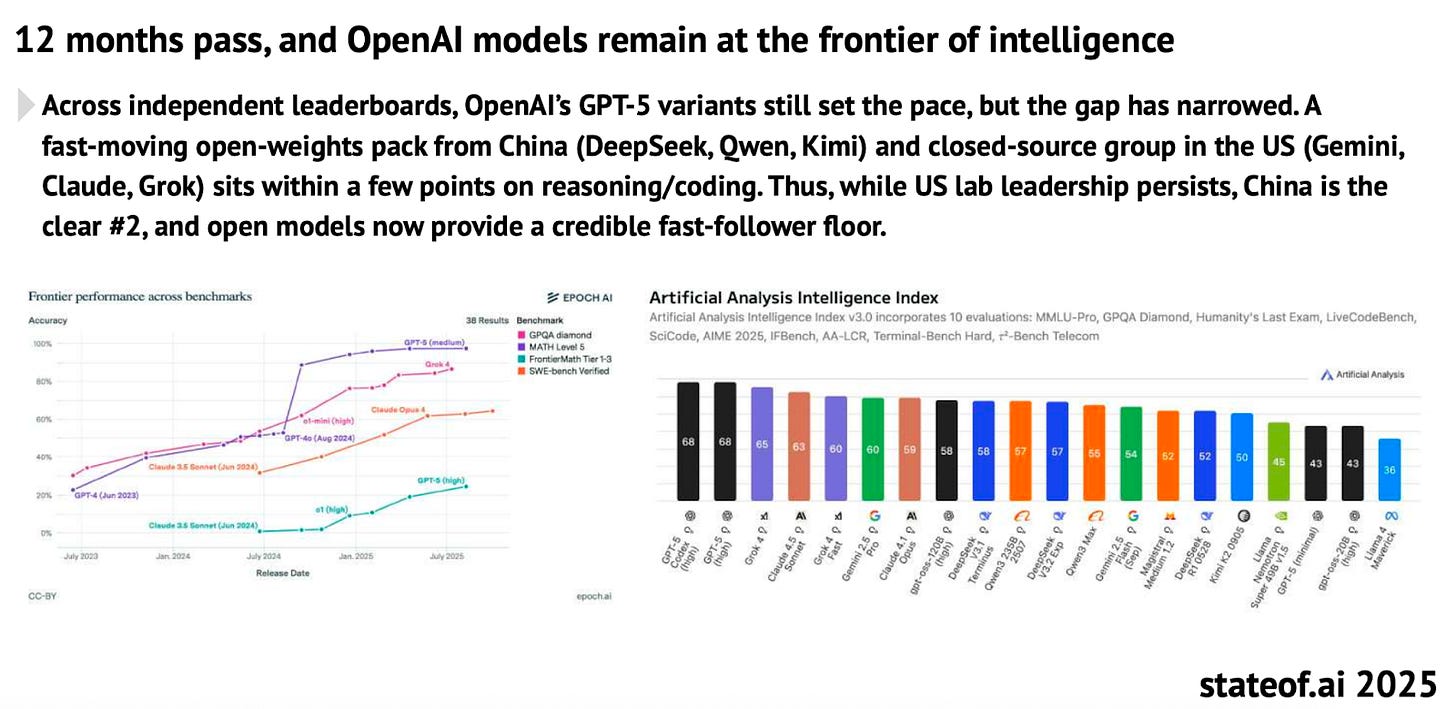

The release of OpenAI’s o1-preview in late 2024 kicked off a race to scale reasoning.

Reasoning race

After years of trying to peek inside the AI brain, OpenAI made a turning point out of training models to reason step by step with a Chain-of-Thought (CoT). The approach boosted accuracy on the American Invitational Mathematics Examination (AIME) upward from 44.6% as more compute was applied.

But you know how it goes: the counterattack came quickly. DeepSeek launched R1-Zero, a 671B model that taught itself to reason with the GRPO technique, using reinforcement learning without any human-written reasoning examples. Its refined sibling, R1, went on to score 79.8% on AIME.
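GRPO (Group Relative Policy Optimization) drops the separate value network used in PPO. For each prompt, it samples a group of answers and scores them. Each answer's advantage is then its reward measured against the rest of its group. Below is a minimal sketch of just that advantage step. It assumes a simple 0/1 correctness reward and normalizes with the population standard deviation. The reward values are made up, and the full policy update (clipping, KL penalty) is omitted.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Score each sampled answer relative to its own group.

    Advantage = (reward - group mean) / group std, so no learned critic
    is needed. Using the population std (pstdev) is an assumption here.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical example: 4 sampled answers to one AIME-style problem,
# rewarded 1.0 if the final answer is correct and 0.0 otherwise.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])
```

Correct answers get a positive advantage and wrong ones a negative one. The policy is then nudged toward whatever its own better samples did, with no human-written reasoning required.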

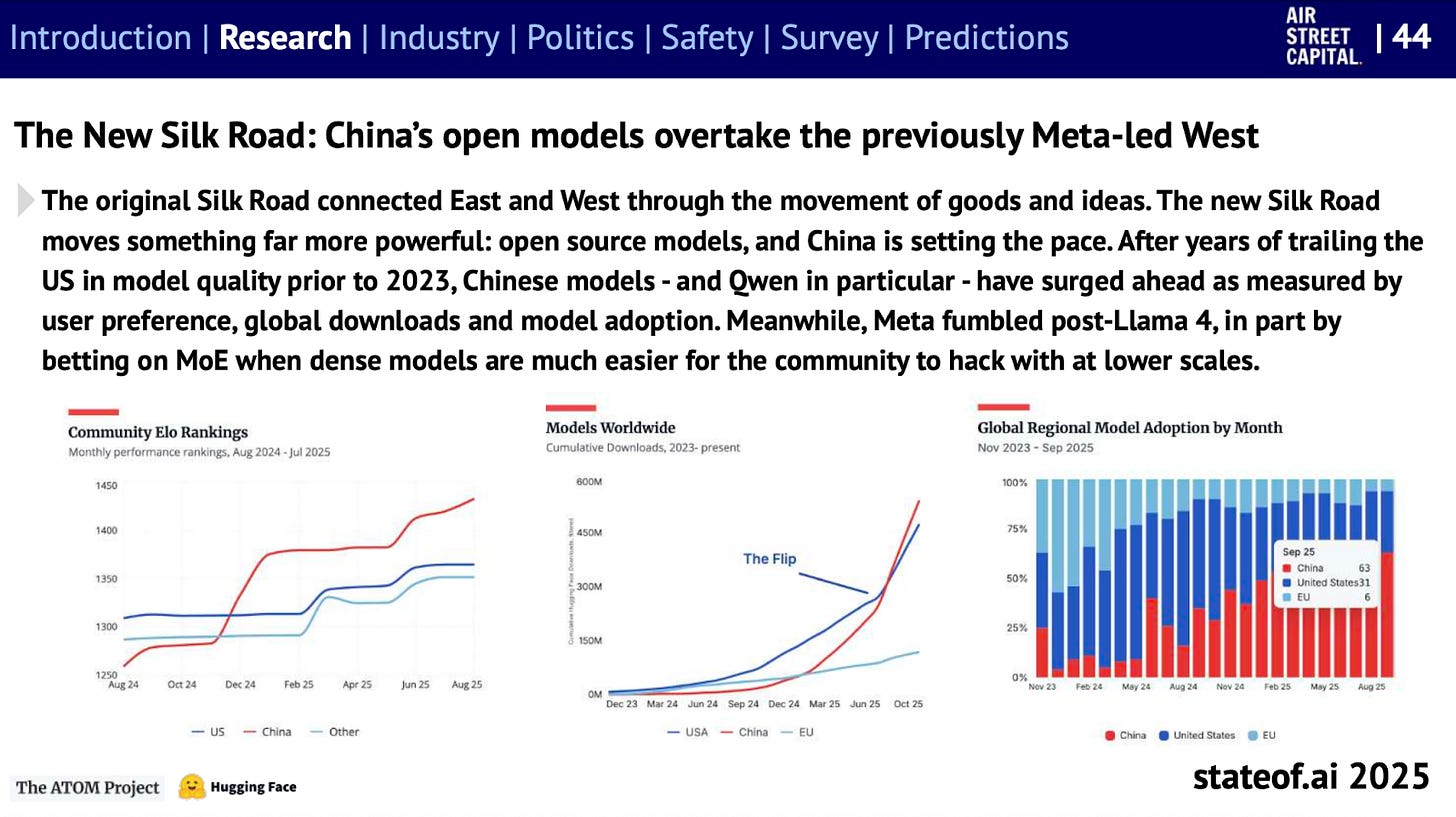

For years, Silicon Valley set the rhythm of artificial intelligence, from the models to the culture, but the race has clearly gone international. At the forefront is Alibaba’s Qwen, which now powers over 40% of new model derivatives on Hugging Face.

Oops… That looks like a clear signal that the frontier of AI innovation is no longer confined to the West (if we’re talking in political terms).

CatAttack reveals shortcomings

Of course, the reasoning gains come with problems. Researchers took small benchmarks like AIME (just 30 questions) and appended a single irrelevant sentence, such as “cats sleep most of their lives,” to each problem. That alone confused models like DeepSeek R1, Qwen, Llama, and Mistral. They started hallucinating and doubled their error rates. Worse, they churned out 50% more “thinking” tokens as they wrestled with the irrelevant data.

The models are simply not robust to noise and cannot filter out irrelevant context.
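The attack itself is easy to outline as an evaluation harness. You append the same irrelevant sentence to every problem, rerun the model, and compare error rates. Below is a minimal sketch. The `placeholder_model` is hypothetical and only marks where a real reasoning model would be called, so it does not reproduce the report's numbers.

```python
from typing import Callable

# Distractor in the spirit of the sentence quoted above; it has nothing to do with the math.
DISTRACTOR = "Cats sleep most of their lives."


def add_distractor(problem: str, trigger: str = DISTRACTOR) -> str:
    """Append an irrelevant sentence; the correct answer stays the same."""
    return f"{problem} {trigger}"


def error_rate(model: Callable[[str], str], dataset: list[tuple[str, str]]) -> float:
    """Share of (question, expected_answer) pairs the model answers wrongly."""
    wrong = sum(1 for question, expected in dataset if model(question).strip() != expected)
    return wrong / len(dataset)


def cat_attack(model: Callable[[str], str], dataset: list[tuple[str, str]]) -> tuple[float, float]:
    """Return (clean error rate, error rate with the distractor appended)."""
    attacked = [(add_distractor(q), a) for q, a in dataset]
    return error_rate(model, dataset), error_rate(model, attacked)


# Hypothetical placeholder model: swap in a call to a real reasoning model here.
def placeholder_model(prompt: str) -> str:
    return "4" if "2 + 2" in prompt else "unknown"


dataset = [("What is 2 + 2?", "4"), ("What is 3 + 5?", "8")]
clean, attacked = cat_attack(placeholder_model, dataset)
print(f"clean error: {clean:.0%}, with distractor: {attacked:.0%}")
```

With a real model, the finding described above would show up as the second number being roughly double the first. Counting output tokens in the same loop would capture the extra “thinking” too.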

The “AI Hawthorne effect,” a term researchers introduced later, muddies the waters further. It means a model realizes(!) it’s being tested and starts behaving “better,” much like a student who tries harder when the teacher is watching. Because of this, it’s becoming increasingly difficult to tell how safe AI systems truly are.

At some point, we might have to accept a “controllability tax”: consciously choosing slower but transparent systems that we can actually understand.

After all, with great power comes great responsibility, and a race for power alone has never led to anything good.

The New Wave of AI Video Worlds

We all describe video models like Sora, Gen-3, and Kling as “game-changers.” But are they, really? We can’t interact with the scene or change it. In contrast, the State of AI Report 2025 highlights “world models,” which predict the next frame from the current state and the user’s actions.

Genie 3 generates 720p worlds at 24 fps from a text prompt. Odyssey streams a new frame every 40 milliseconds (up to 30 fps) in sessions lasting over 5 minutes, and you can steer them from your own device without a game engine. Both forecast the next frame from your moves and can add weather changes and new objects that stick around.

It’s like playing a video game that AI dreams up on the fly.
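Under the hood, these world models run an autoregressive, action-conditioned loop: each new frame is predicted from the frames so far plus the user’s latest input. Below is a toy sketch of that loop. A hypothetical `predict_next_frame` function stands in for the real neural network. A “frame” here is just a player position plus a set of persistent objects, not pixels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """Hypothetical toy frame: player position plus objects that persist."""
    player: tuple[int, int]
    objects: frozenset = frozenset()


MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0), "stay": (0, 0)}


def predict_next_frame(history: list[Frame], action: str) -> Frame:
    """Stand-in for the learned model: next frame = f(past frames, user action).

    A real world model would output pixels; this toy only moves the player
    and carries every existing object forward, so objects 'stick around'.
    """
    current = history[-1]
    dx, dy = MOVES[action]
    x, y = current.player
    return Frame(player=(x + dx, y + dy), objects=current.objects)


def rollout(start: Frame, actions: list[str]) -> list[Frame]:
    """Generate one frame per user action, feeding each prediction back in."""
    history = [start]
    for action in actions:
        history.append(predict_next_frame(history, action))
    return history


frames = rollout(Frame(player=(0, 0), objects=frozenset({"tree"})), ["right", "right", "down"])
print(frames[-1])
```

At Genie 3’s 24 fps, a real model would have roughly 1000 / 24 ≈ 42 ms to run each step of this loop. That is why latency matters so much for interactive world models.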

Dreamer 4 takes this further by training AI agents entirely inside a learned video world, without ever interacting with the real game. It learns how objects behave mostly from unlabeled videos, and its world model runs in real time on a single GPU. Agents trained this way can complete complex tasks like collecting diamonds in Minecraft, though the system still struggles with long-term memory and inventory tracking.

Global competition

The video race isn’t just a U.S. show anymore. Chinese players like Tencent (HunyuanVideo) and Kuaishou (Kling 2.1) are combining open-source technologies with user-ready products, heating up the competition. HunyuanVideo (13B) beats Runway Gen-3 in quality, while Kling 2.1 adds 1080p. Meanwhile, OpenAI’s Sora 2 brings synced audio, better physics, and face cameos, and it can even “solve” text quizzes visually.

So far, OpenAI remains the leader in simulation depth, but China is building an ecosystem around mass adoption and trying to overtake the USA on efficiency. This raises yet another question of world domination (I know it sounds dramatic, but we are literally at this point).

I think you’ve noticed that simulation is becoming a new stage to act on rather than a mirror to look into.

AI Sovereignty and the US-China Race

The US holds a massive edge in AI supercomputer capacity: about 850,000 H100-equivalents (75% of the global total) versus China’s 110,000, putting it roughly 9 times ahead in performance.

Of course, Trump found his way into the AI race as well. In July 2025, the administration unveiled “America’s Grand AI Strategy” alongside a $500 billion Stargate program. Under the “American AI Exports” program, the U.S. began bundling its hardware, models, and cloud services into a geopolitical package for allies.

It echoes the Marshall Plan a little.

At the core of this fortress sits NVIDIA. The company accounts for about 90% of AI chip mentions in research papers and 75% of data-center GPU sales. Its Hopper chips (H100/H200) have become the backbone of every major AI lab.

This system powers rapid innovation (but also creates policy blind spots). America’s AI leadership essentially depends on a single company’s supply chain. If NVIDIA stumbles, the entire ecosystem shakes.

In turn, China, hit by US export bans on NVIDIA chips, has doubled down on open-source models and state-led investment. Instead of chasing NVIDIA’s pace, Beijing is assembling an “Open Silk Road” of tools, weights, and communities that appeal to nations wary of U.S. control (oh, politics), a strategy resembling Android’s rise against Apple’s closed system.

Sovereign AI Investments

The U.S. SANDBOX Act and Trump’s rejection of global AI oversight have supercharged deregulation at home. Unsurprisingly, the EU, the UK, and Japan noticed things heating up and doubled down on their own sovereign AI efforts. Meanwhile, massive U.S. private capital keeps locking in top talent. Sovereignty-washing indeed.

What is happening in Europe

Europe’s AI Act rolls out in phases through 2027, including bans on mass surveillance. Companies can sign the voluntary Code of Practice, whose chapters cover transparency, copyright, and safety and security, or build their own compliance frameworks. But for now, only 3 of 27 member states have designated oversight authorities, and technical standards are still “in development.”

Amazon, Anthropic, OpenAI, Microsoft, and Google signed all Code chapters. xAI signed only “Safety and Security,” skipping transparency and copyright (classic Elon). Meta flat-out refused, calling the Code “legally uncertain”. It seems like Meta isn’t really interested in Brussels’ bureaucracy.

Yes, France’s Mistral launched Magistral, Europe’s first AI reasoning model. But let’s talk turkey: Europe has produced zero tech companies worth more than $400B in the last 50 years, while the US has seven at $1T+. And even after launching the €200B+ InvestAI fund, Europe has implemented only 11% of Draghi’s €800B/year “European Competitiveness Plan.”

Poor Europe faces a tough dilemma: regulate to protect citizens or deregulate to compete. While the US and China pour trillions into infrastructure, Brussels is still stuck arguing over paperwork. Yes, technology needs regulation, as we’ve mentioned, but speed matters just as much in making those decisions.

History doesn’t wait for those still writing the rules, and by the time Europe sorts out its rulebook, the race might already be done and dusted.

Agents Uprising

Well, we definitely can’t hide from the term “AI agents” anymore. Although Andrej Karpathy called current agents “drafts” (who are we to argue?), they’re already part of everyday AI life.

The report explains that AI systems are shifting from stateless APIs to stateful, agentic architectures. These systems can remember context, call tools, and coordinate multi-step workflows. Most current agents handle repetitive tasks like filling in forms, calling APIs, and writing short pieces of code. The authors illustrate the shift with real-world agents like DeepMind’s Co-Scientist, which has proposed drug candidates that were later validated, and Google’s AMIE, which outperformed physicians in diagnosis.

Agents open a new chapter: instead of answering single prompts, AI now acts autonomously across multiple steps and tools.
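The difference between a stateless API and a stateful agent is easiest to see in code. Below is a minimal sketch built on a few assumptions. It uses two hypothetical tools (`add_numbers`, `lookup_form_field`). A fixed plan stands in for the language model that would normally choose each next step. The agent runs tools one after another and keeps a memory log that persists across steps, whereas a stateless call would forget everything once it returned.

```python
from dataclasses import dataclass, field
from typing import Callable

Tool = Callable[[str], str]


def add_numbers(args: str) -> str:
    """Hypothetical tool: sums comma-separated numbers."""
    return str(sum(float(x) for x in args.split(",")))


def lookup_form_field(name: str) -> str:
    """Hypothetical tool: pretends to fetch a value needed to fill in a form."""
    return {"country": "UK", "currency": "GBP"}.get(name, "unknown")


@dataclass
class StatefulAgent:
    tools: dict[str, Tool]
    memory: list[str] = field(default_factory=list)  # survives between steps

    def step(self, tool_name: str, args: str) -> str:
        """Call one tool and record the call and its result in memory."""
        result = self.tools[tool_name](args)
        self.memory.append(f"{tool_name}({args}) -> {result}")
        return result

    def run(self, plan: list[tuple[str, str]]) -> list[str]:
        """Execute a multi-step plan; a real agent would let an LLM pick each step."""
        for tool_name, args in plan:
            self.step(tool_name, args)
        return self.memory


agent = StatefulAgent(tools={"add": add_numbers, "lookup": lookup_form_field})
log = agent.run([("lookup", "country"), ("add", "2,3")])
print(log)
```

In a real system, the memory list would be fed back into the model’s context at every step. That is exactly what makes agents useful, and why whatever goes into that memory matters.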

They remember everything… so be careful what you feed them.

Energy is the New Currency

This one is sad, actually. There isn’t enough electricity, and there aren’t enough GPUs. Models are growing larger, and data centers are getting hotter. By 2030, top supercomputers may need 2 million chips, $200B, and 9 GW of power, roughly as much as several large nuclear power plants combined. China currently leads in infrastructure, adding 427.7 GW of capacity in 2024 (compared to 41.1 GW in the U.S.) and investing $84.7 billion in transmission.

For context: Bitcoin consumes about 175.9 TWh per year, still edging out AI. Some estimates suggest AI could surpass it by the end of 2025… unless the industry actually commits to optimization over scaling.
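The figures above mix units: annual energy (TWh per year) and power draw (GW). To compare them directly, here is a back-of-the-envelope conversion. It assumes Bitcoin’s consumption is spread evenly over a 365-day year (8,760 hours):

```latex
\frac{175.9\ \text{TWh/yr}}{8760\ \text{h/yr}} \approx 0.0201\ \text{TW} \approx 20.1\ \text{GW}
```

So Bitcoin’s average draw is a little over twice the 9 GW that a single top supercomputer might need by 2030.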

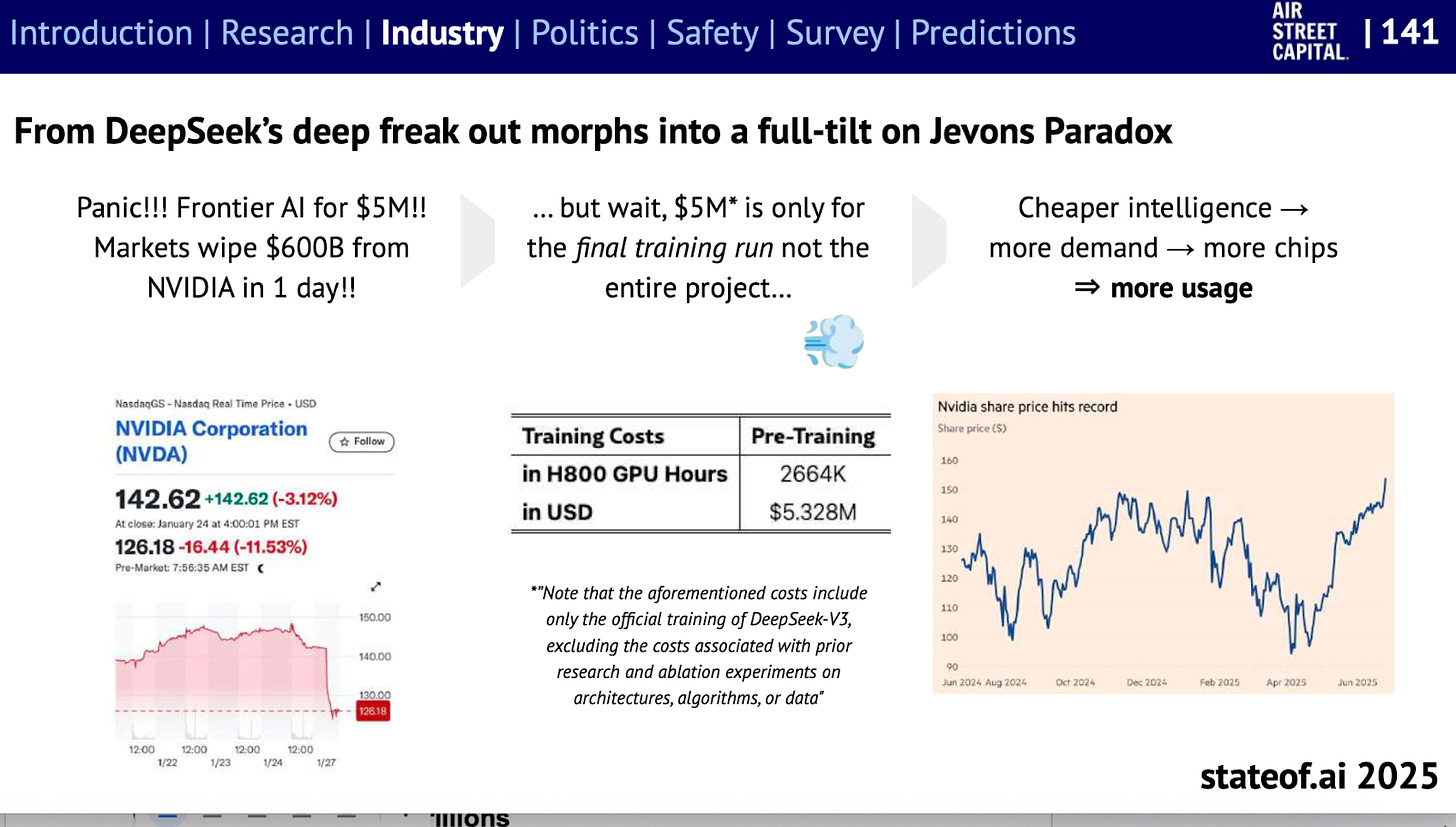

At the same time, compute requirements double every five months, while GPU prices creep up only slowly. Smaller models can now compete with larger systems at significantly lower cost. This is reshaping investment strategies and making AI increasingly accessible. It also creates what economists call the “Jevons paradox”: as AI gets cheaper and more efficient, total usage (and total energy demand) grows instead of shrinking.
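A five-month doubling time compounds faster than intuition suggests. The small helper below shows what that rate implies over one and two years. It assumes the rate holds steady, which is a big assumption.

```python
def growth_factor(months: float, doubling_time_months: float = 5.0) -> float:
    """Multiplier for a quantity that doubles every `doubling_time_months`."""
    return 2 ** (months / doubling_time_months)


for months in (5, 12, 24):
    print(f"{months:>2} months -> x{growth_factor(months):.1f}")
```

One year works out to about 2^(12/5) ≈ 5.3x, and two years to roughly 28x. That compounding is why power, not chips, keeps coming up as the bottleneck.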

Power (not chips) might determine who wins the AI race.

From Lab to Life: AI in the Real World

The report surveyed 1,200 AI practitioners from the US, UK, and EU (subscribers to Nathan’s mailing list). You can judge for yourself how representative this sample is, especially given its geographic concentration. Still, it offers a snapshot, and the snapshot shows the gap between cutting-edge research and commercial reality shrinking fast.