OpenAI o1 Review: It's Not So Perfect?

Discussing o1 from the perspective of creators and entrepreneurs

Hi!

So, last week, OpenAI finally let some users try its new model. As usual, some users were delighted (a few even called o1-preview “that GPT-5”), while others were disappointed by its significant limitations.

Today, let's discuss the new OpenAI model, its advantages and disadvantages, and run relevant tests for creators and entrepreneurs. Ultimately, we'll try to answer whether OpenAI succeeded in creating the perfect model.

In this edition:

Opportunities and limitations of o1-preview

The technical performance of the new OpenAI model

Testing o1-preview from a founder's and creator's perspective

Tips & Tricks for working with o1

Great Opportunities & Limitations

What is OpenAI's o1-preview?

It is a new model developed under the code name “Strawberry.” (Technically, it is a family of models because there is also o1-mini, but that is not important for our purposes.) Its distinguishing feature is a tendency to reason and, in a sense, self-reflect. It takes more time to think before it answers.

This makes o1-preview noticeably slower than GPT-4 but lets it reason through and solve complex problems better than its predecessor. It is therefore aimed less at simple queries and more at work in science, coding, math, and other complex domains.

Here is how its developers evaluate o1:

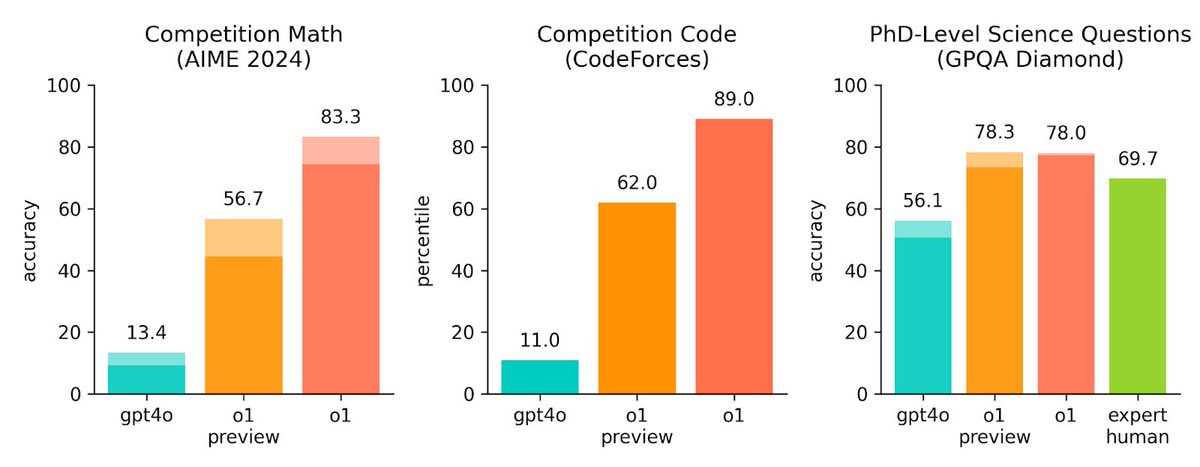

In our tests, the next model update performs similarly to PhD students on challenging benchmark tasks in physics, chemistry, and biology. We also found that it excels in math and coding. In a qualifying exam for the International Mathematics Olympiad (IMO), GPT-4o correctly solved only 13% of problems, while the reasoning model scored 83%.

According to OpenAI's research lead, Jerry Tworek, o1 has been trained using a completely new optimization algorithm and a new training dataset tailored explicitly for it. The model processes requests using a “chain of thought,” similar to how people process problems by going through them step by step.

OpenAI also says that o1 represents a new level of AI capability, so the company is resetting its version counter to 1 (that's why it's called “o1”, by the way).

According to tests conducted by OpenAI, o1 ranks in the 89th percentile on competitive programming questions (Codeforces), places among the top 500 students in the U.S. in a qualifier for the USA Math Olympiad (AIME), and exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems (GPQA).

o1 also significantly outperforms GPT-4o on various benchmarks, including 54 of 57 MMLU subcategories. The image above shows that on some tasks the advantage is severalfold.

As for the limitations, they are quite serious.

For starters, o1 does not support file handling. Unlike GPT-4, you can't give the model images or documents. Online search doesn't work either, so you have to pay special attention to the accuracy of each response and check for yourself whether the information is up to date. API access is limited too: developers must be on usage tier 5 to gain access.

Another factor that makes you consider whether o1-preview is worth working with is its cost. The o1-preview API costs $15 per 1M input tokens (the chunks of text the model analyzes) and $60 per 1M output tokens. By comparison, its predecessor, GPT-4o, costs $5 per 1M input tokens and $15 per 1M output tokens.
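To make the price gap concrete, here is a minimal sketch that applies the per-token prices quoted above to a single request. The workload (2,000 input tokens and 1,000 output tokens) and the helper name `request_cost` are our own assumptions for illustration, not figures from OpenAI.

```python
# Prices in USD per 1M tokens, as quoted in the paragraph above.
PRICES = {
    "o1-preview": {"input": 15.0, "output": 60.0},
    "gpt-4o": {"input": 5.0, "output": 15.0},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of one request (hypothetical helper)."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Assumed workload: 2,000 input tokens and 1,000 output tokens per request.
o1_cost = request_cost("o1-preview", 2_000, 1_000)
gpt4o_cost = request_cost("gpt-4o", 2_000, 1_000)

print(f"o1-preview: ${o1_cost:.3f}")
print(f"gpt-4o:     ${gpt4o_cost:.3f}")
print(f"ratio:      {o1_cost / gpt4o_cost:.1f}x")
```

For this assumed request, o1-preview comes out at about 9 cents against 2.5 cents for GPT-4o, or roughly 3.6 times more. In practice the gap is likely wider, because the hidden reasoning tokens that o1 produces while thinking are billed as output tokens.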

Finally, perhaps the most infuriating limitation is the number of messages you can send. In ChatGPT, o1-preview only allows 30 messages per week. You'll have to keep count yourself and plan your sessions well.

To be fair: there are no message limits in the API.

So that you don't have to spend too much time counting every message, we've run some tests and are sharing the results. In the process, we had several goals: to find out whether o1 is suitable for non-scientists, how good it is at applied tasks, and, in the end, whether it makes sense to pay extra or whether you should stick with GPT-4o.

Testing OpenAI's o1-preview

First, we have to define the perspective from which we will test o1. OpenAI claims that, among other things, the new model helps solve problems in science, including biology, physics, and many other fields. Unfortunately (or fortunately), we are not scientists, so we will test o1 on more applied problems, for example, from the point of view of an entrepreneur or creator with several issues to solve.

During testing, we will be comparing o1 to GPT-4o.