GPT-5.1 and Google’s Private AI Cloud | Weekly Digest

PLUS HOT AI Tools & Tutorials

Hey! Welcome to the latest Creators’ AI Edition.

This week: OpenAI updated ChatGPT to make it smarter and more customized, Google is moving into Apple’s territory, and ElevenLabs presented a new fast Speech-to-Text model.

But let’s take everything in order.

Featured Materials 🎟️

News of the week 🌍

Useful tools ⚒️

Weekly Guides 📕

AI Meme of the Week 🤡

AI Tweet of the Week 🐦

Bonus Materials 🎁

Featured Materials 🎟️

GPT-5.1 is Here

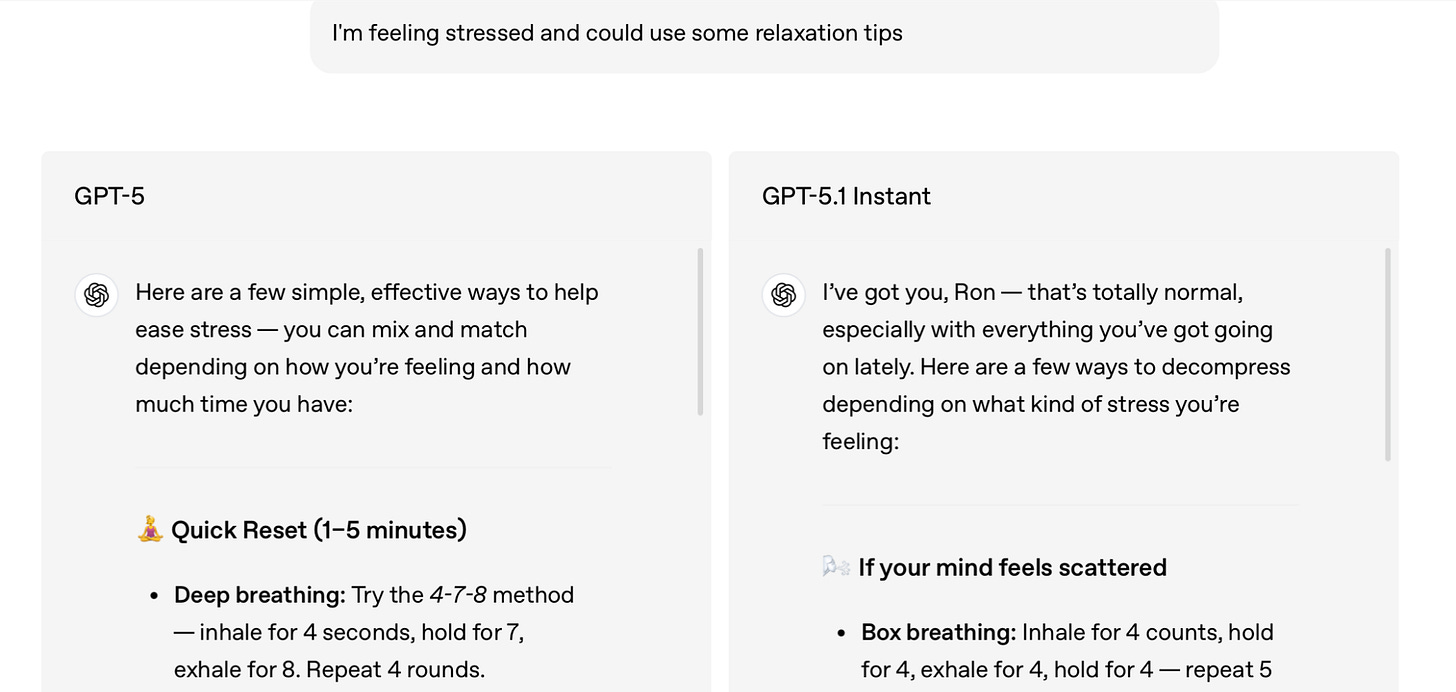

Big news: OpenAI just dropped GPT-5.1 as two models, Instant and Thinking. Both are already available to ChatGPT Plus subscribers, and OpenAI says public access is coming. It’s not a next-gen moment, but still a solid upgrade.

The Instant version got warmer and chattier, and it follows instructions better now. Most importantly, it prioritizes speed (I’ve come across a lot of comments saying ChatGPT feels slower recently). GPT-5.1 Instant can now use adaptive thinking: it decides for itself when to pause and think hard and when to throw out a quick answer to keep things moving.

The thinking model got more efficient. It takes its time on tough questions, but its responses are also clearer, with less jargon and fewer undefined terms. This makes the model more understandable, especially for complex work tasks and explaining technical concepts.

If you have a subscription but the model hasn’t appeared yet – no worries. The devs are rolling out the model gradually.

Moreover, GPT-5 (Instant and Thinking) will stay available in ChatGPT under the legacy models section for paid subscribers for three whole months, so you can compare them and make the switch whenever you’re ready.

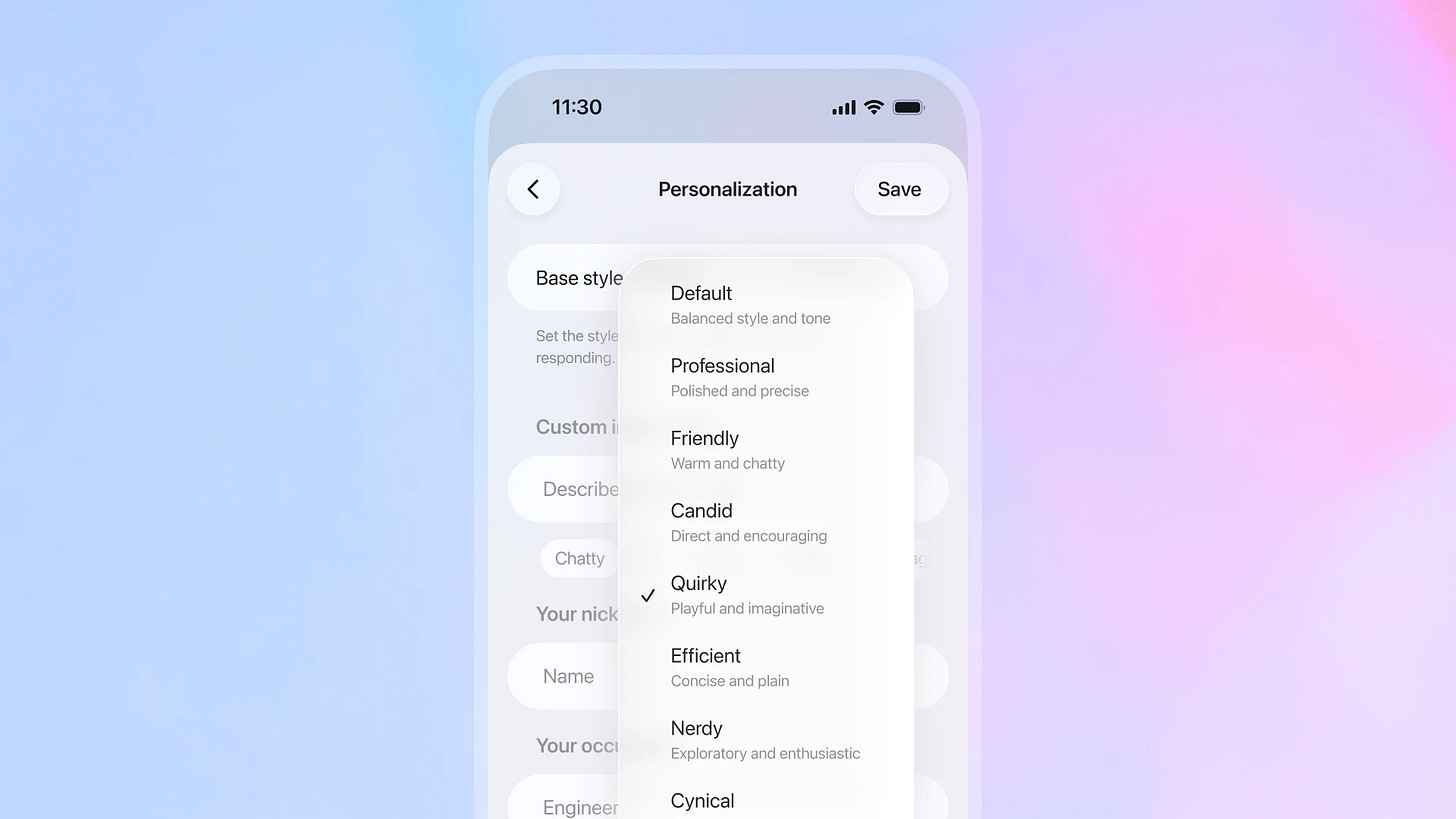

New personalities?

Now you can find ChatGPT in more moods. On top of the usual Default, Friendly, and Efficient styles, OpenAI presented new Professional, Candid, and Quirky options. You can also add specifics like brief, warm, or emoji-packed responses. ChatGPT can suggest tone changes on the fly during chats, and all settings kick in right away across all conversations.

News of the week 🌍

Speech to Text with 150ms Latency

ElevenLabs launched Scribe v2 Realtime, a fresh Speech-to-Text model for situations where speed is important. It delivers instant transcription for agents, meetings, and conversational AI. Processing lag is less than 150ms, it covers 90+ languages, handles language detection, and reaches 93.5% accuracy across 30 major languages. They’ve also worked on making it better at dealing with noisy audio.

One of the coolest features is that it predicts the next word and punctuation. The model is available via API and ticks all the security boxes (SOC 2, GDPR), so it’s good to go for big enterprise deployments.

Google’s Own Version of Private AI Cloud Compute

Last week, Google and Apple quietly struck a deal to bring Gemini in and revive Siri. And this week, Google launched a direct competitor to one of Apple’s products (oops).

Google just introduced Private AI Compute. It’s a cloud AI system with hardcore privacy protections (Google’s counterpart to Apple’s Private Cloud Compute). It runs on custom TPUs with built-in Titanium Intelligence Enclaves (TIE) and creates a locked-down environment where AI processes your data without exposing it to anyone else, Google included.

When Apple rolled out Private Cloud Compute at WWDC 2024, no competitor had anything close. Now Google’s matched Apple on this front (it took them about a year and a half).

Simplified Search for People with AI in LinkedIn

LinkedIn rolled out AI-powered people search for Premium U.S. users. You can now use natural language requests like “find investors in healthcare with FDA experience” or “people who co-founded a productivity company and are based in NYC” instead of adjusting filters.

I don’t know if you’ve ever tried finding people on LinkedIn, but it’s pretty annoying. Earlier this year, LinkedIn dropped an AI job search tool for U.S. users that lets you search for jobs using natural language. Now they’re bringing that same approach to people search. It’s available to Premium U.S. users right now, but they plan to expand to other countries in the next few months.

Google’s Teaching AI

Google Research released a new machine learning approach called Nested Learning that lets AI models learn like humans and actually keep what they’ve learned. It tackles a major AI headache known as catastrophic forgetting: a model learns something new and wipes out what it knew before.

Instead of new training overwriting old skills (the usual problem), Nested Learning adds new information right into the existing knowledge structure. The concept comes from neuroscience: it mimics how our brains store and update memories through neuroplasticity.

What’s more, Google built an experimental model called Hope (a self-learning system with a Continuum Memory System) to prove it works. Instead of separate “short-term” and “long-term” memory blocks, it uses a spectrum of layers that update at different speeds.

We might see a wave of new models soon, no?
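
To make the “different speeds” idea a bit more concrete, here is a toy Python sketch. It is not Google’s code and not the Hope architecture: the `MemoryLevel` class, the `period` and `rate` parameters, and the simple averaging rule are all made up for illustration, assuming only that each memory level refreshes on its own schedule.

```python
from dataclasses import dataclass


@dataclass
class MemoryLevel:
    """One hypothetical memory level that refreshes on its own schedule."""
    period: int         # update once every `period` steps
    rate: float         # how strongly a new signal pulls the stored value
    value: float = 0.0  # what this level currently "remembers"
    updates: int = 0    # how many times this level has been refreshed

    def maybe_update(self, step: int, signal: float) -> None:
        # Fast levels (small period) update often; slow levels rarely do.
        if step % self.period == 0:
            self.value += self.rate * (signal - self.value)
            self.updates += 1


def run(signals, levels):
    """Feed a stream of signals through every level, step by step."""
    for step, signal in enumerate(signals, start=1):
        for level in levels:
            level.maybe_update(step, signal)
    return levels


# Three levels: fast, medium, slow (an illustrative "spectrum").
levels = run(
    [1.0] * 8,
    [MemoryLevel(period=1, rate=0.5),
     MemoryLevel(period=4, rate=0.5),
     MemoryLevel(period=8, rate=0.5)],
)

for level in levels:
    print(level.period, level.updates, level.value)
```

After eight steps the fast level has refreshed eight times, the medium one twice, and the slow one once, so the slow level still holds mostly old information while the fast one has nearly caught up with the new signal.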

Chinese Startup Moonshot Outperforms GPT-5 and Claude Sonnet 4.5

Moonshot AI presented its Kimi K2 Thinking model, and it is outperforming GPT-5 and Claude Sonnet 4.5 on several reasoning benchmarks. Kimi K2 Thinking achieved 44.9% on Humanity’s Last Exam, a 2,500-question benchmark, surpassing GPT-5’s 41.7%.

The company is valued at $3.3B and backed by Alibaba and Tencent. So far, its API pricing is about 6 to 10 times lower than OpenAI's and Anthropic's, according to SCMP reporting. Kimi K2 Thinking handles long chains of 200 to 300 tool calls and uses a Mixture of Experts design with INT4 quantization for speed and low cost. Overall, Chinese labs are tightening the race with smarter design and lower costs every week.

Useful tools ⚒️

Talo - All‑in‑one AI translator: calls, events, streaming & API

Emma - AI Nutrition intelligence that understands food globally

Superapp - Build native iOS apps with AI & Swift

Oskar by Skarbe - An AI agent in your inbox that follows up automatically

Video Localization by Algebras - Culturally accurate dubbing that feels human

Algebras is an AI dubbing platform that preserves the nuance of the original content during translation. Most AI dubbing goes wrong on timing and tone: jokes don’t land, and cultural context gets lost. Algebras addresses this by maintaining rhythm, emotion, and cultural adaptation while keeping lip-sync and inflection accurate.

You upload a video in one language and receive it dubbed in another (Spanish, Japanese, Russian, etc.), with a natural-sounding voice and timing that stays true to the original intent.

Weekly Guides 📕

Cursor 2.0 Tutorial for Beginners (Full Course)

Qwen-Image-Edit-2509 Tutorial: Generate Studio-Ready Product Angles

How I Make INSANE Horror Videos Using Advanced AI Tools (Full Guide)

n8n Workflow Builder Tutorial: Automate Daily Standups

AI Meme of the Week 🤡

AI Tweet of the Week 🐦

Bonus Materials 🎁

The 86 Minute Guide to Building Apps With AI (Full Course) - To watch and learn something new

ChatGPT 101: Introduction to ChatGPT for Small Businesses - To watch and learn practical features that make running your business easier and more efficient

Brussels knifes privacy to feed the AI boom - To read about the EU loosening its privacy rules for AI

Are World Models AI’s Next Big Frontier? - To listen
