12 Days of OpenAI & Gemini 2.0 | Weekly Edition

PLUS HOT AI Tools & Tutorials

Hello and welcome to our weekly roundup!

This week, OpenAI continued to inundate us with its updates as part of the 12 Days of OpenAI. But it wasn't alone. Google suddenly also shared some incredible products!

Let's talk about it.

Lately, one of the most debated topics has been the new ChatGPT Pro subscription. We looked into whether it is worth $200/mo in this post:

This Creators’ AI Edition:

Featured Materials 🎟️

News of the week 🌍

Useful tools ⚒️

Weekly Guides 📕

AI Meme of the Week 🤡

AI Tweet of the Week 🐦

(Bonus) Materials 🎁

An online all-in-one AI voice generator for everyone

Fineshare is an online all-in-one AI voice generator and a platform that offers AI voices for every need. With advanced AI cloning technology, you can create exclusive, lifelike AI voices in one minute and use them for text to speech, AI voice changing, AI covers, and real-time voice changing, making AI voice creation easier than ever.

Featured Material 🎟️

12 Days of OpenAI Continues

Last week, we discussed the first day of 12 Days of OpenAI, when the company explained how the event would be held and introduced a new ChatGPT Pro subscription for $200/mo. A week has passed since then, so we have a whole set of news to cover.

To avoid confusion, let's discuss them one by one.

Day 2 | Reinforcement Fine-Tuning

The second day turned out to be less exciting for ordinary users. The company introduced Reinforcement Fine-Tuning (RFT). This new technique allows developers to create highly specialized AI models for complex, domain-specific tasks. One of its most significant advantages is that it requires only a small number of examples—dozens, rather than the thousands typically needed for traditional fine-tuning.

RFT is available through an alpha program, with a public release planned for early 2025. OpenAI also accepts applications for its Research Program, particularly from research institutes, universities, and enterprises.

Day 3 | Sora Is Finally Here

The highly anticipated text-to-video AI tool, Sora, was unveiled. We still have a lot of testing to do before we can fully appreciate its capabilities, but we can already say that it is one of the best video generators on the market.

And we also covered everything you need to know about Sora in this post:

Day 4 | Canvas for All Users

The company has launched ChatGPT Canvas, a new collaborative interface for writing and coding, now available to all users. It enables real-time interaction with the AI in a side-by-side workspace, facilitating collaborative editing, inline suggestions, and the ability to run Python code directly within the interface.

ChatGPT Canvas is accessible to Free, Plus, and Pro ChatGPT users through the GPT-4o model. It aims to give users more control and flexibility than traditional chat formats. OpenAI plans to introduce additional features as Canvas continues in its early beta phase.

Day 5 | ChatGPT & Apple Intelligence

Apple has integrated ChatGPT into its ecosystem with the release of iOS 18.2. This update enhances Siri's functionality by allowing it to use OpenAI's language model for more detailed responses. Users can now ask complex questions, and Siri will determine if ChatGPT can provide a better answer, with user permission required for access.

The update also introduces features under Apple Intelligence, including enhanced writing tools and visual intelligence capabilities.

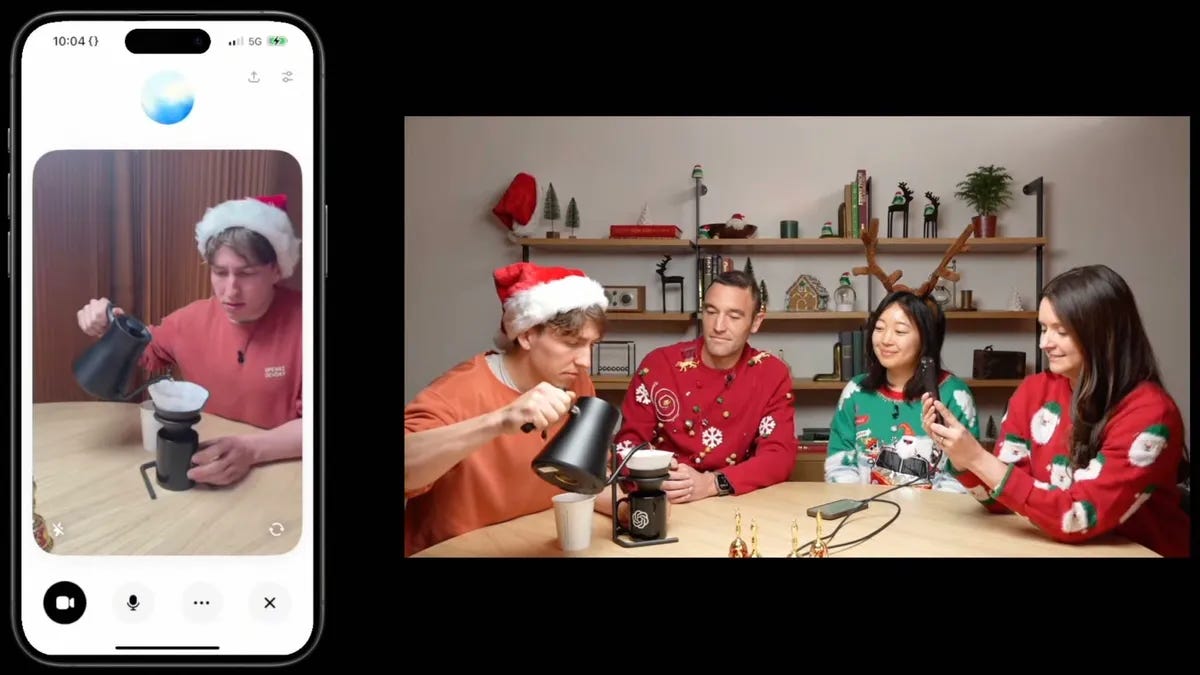

Day 6 | Advanced Voice with Vision

On Day 6, OpenAI launched Advanced Voice with Vision. It allows users to interact with ChatGPT using voice input, images, and video. Currently, Advanced Voice with Vision is available to ChatGPT Plus and Pro subscribers. Users can utilize a microphone for voice input, upload images via a camera icon, and engage with a 'Chat with Santa' option for seasonal interaction.

The feature enables users to ask questions about images and receive spoken responses. While it is being rolled out globally, the 'Chat with Santa' option will be accessible to all users, including those who have reached their free chat limit.

The first half of “12 Days” is behind us, and we can already say that OpenAI did not disappoint. But it seems that the company has saved something even bigger for last. What can it be?

News Of The Week 🌍

Google AI Updates

Google announced the release of Gemini 2.0 Flash and a slew of related updates. The new model can generate images and audio, is faster and cheaper to run, and is designed to make AI agents more accessible. The experimental release of Gemini 2.0 Flash is already available through the Gemini API and Google's AI developer platforms, AI Studio and Vertex AI.

However, audio and image generation capabilities are launching only for “early access partners” for now, with a broad rollout planned for January.

Here's what we know about Gemini 2.0:

Agentic Capabilities: Gemini 2.0 is designed to exhibit "agentic" behavior, allowing it to understand its environment, engage in multi-step reasoning, and perform actions based on user input.

Multimodal Functionality: The model supports integrated processing of text, images, audio, and video, enabling more versatile interactions.

Improved Reasoning: Enhancements include better performance in reasoning tasks, advanced mathematical problem-solving, and code generation.

Developer Tools: The new Multimodal Live API lets developers stream audio and video inputs in real time while using multiple tools in combination.

Updated Version of Project Astra

Google also shared more details about one of its most promising projects, revealing an updated version of Project Astra, an AI assistant that uses new ways to navigate the physical world. Astra uses either an Android app or prototype glasses to record the world as a person sees it. The AI then summarizes what it sees and answers a wide range of questions, drawing on Google services like Search, Maps, and Lens.

None of this is entirely new, but the Astra prototype is now powered by Gemini 2.0. This has given the assistant a longer memory, faster responses, and support for more languages. It's also more personalized overall, remembering past conversations and individual preferences.

Unfortunately, Astra is only available to a limited number of testers.

Google Unveils Web Navigation Assistant

The other major release from Google this week was Project Mariner. It's an experimental AI agent built on the Gemini 2.0 platform, designed to automate web-based tasks and navigate the internet on behalf of users. This Chrome extension uses AI to understand and interact with web content, including text, images, and forms.

Google claims the tool handles a wide range of tasks, including research automation, online shopping assistance, travel booking, recipe searches, and meal planning.

Gemini Gains Deep Research Feature

Google has introduced Deep Research, a new feature in its Gemini Advanced AI platform, designed to act as a personal research assistant. This tool enables users to ask complex questions, and Gemini will browse the web, analyze information, and compile a comprehensive report with key findings and source links.

Deep Research is currently available in English to Gemini Advanced subscribers on desktop and mobile web, with plans to roll out to the Gemini app in early 2025.

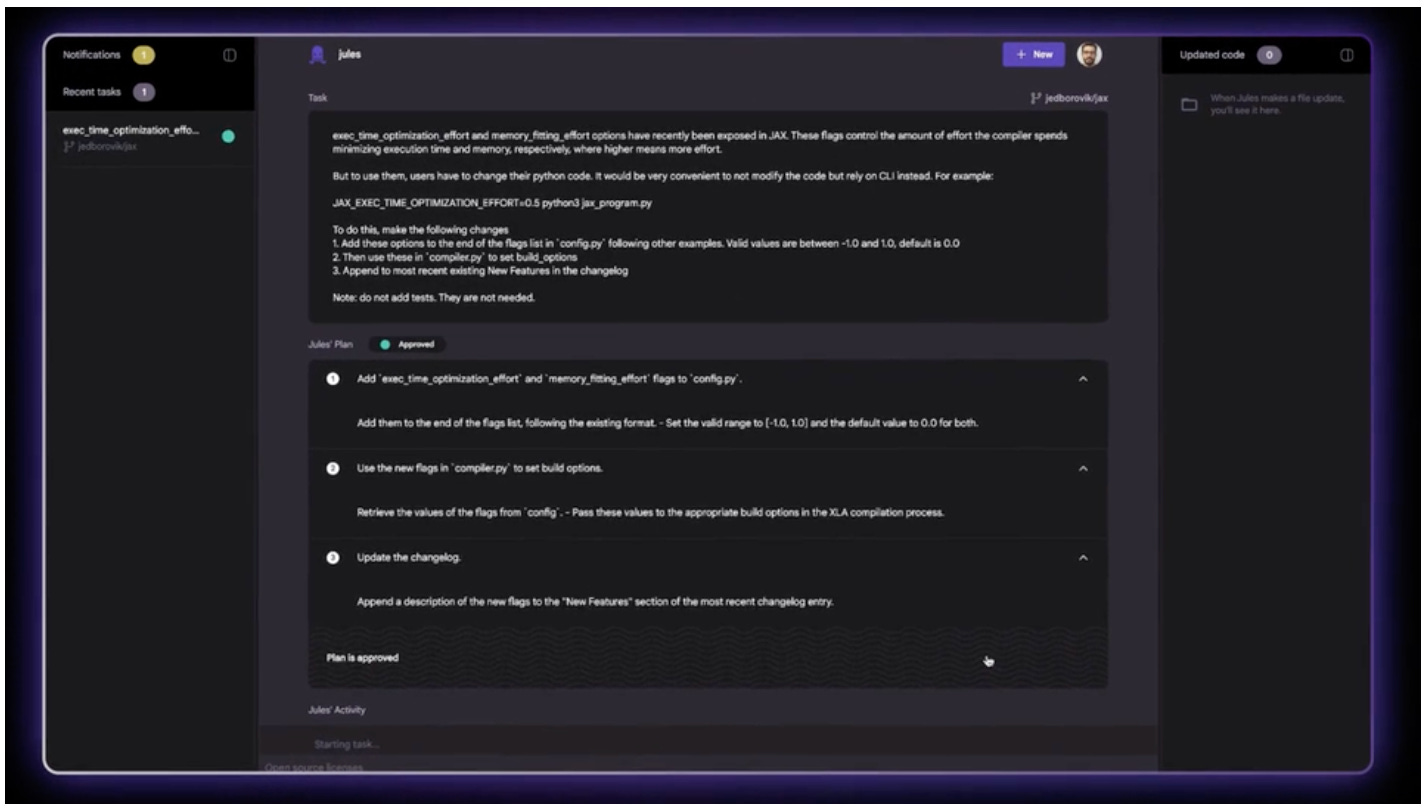

Google Presents Its AI Assistant for Coding

Google also announced Jules, an AI-powered coding assistant designed to streamline software development tasks. Built on the Gemini 2.0 platform, Jules can autonomously fix bugs, generate code changes, and prepare pull requests for Python and JavaScript tasks in GitHub workflows. It is expected to be released on a larger scale in early 2025.

Jules’ key features:

Bug Fixing: Analyzes complex codebases to implement fixes across multiple files.

Multi-Step Planning: Develops detailed strategies for addressing coding issues.

GitHub Integration: Works seamlessly within GitHub’s workflow system.

Real-Time Tracking: Allows developers to monitor task progress and prioritize actions.

Anthropic Launches Claude 3.5 Haiku for All Users

Anthropic has officially released Claude 3.5 Haiku, its latest AI model, making advanced AI capabilities widely accessible to both free and paid users. The model became available this week across multiple platforms, including Anthropic's Claude chatbot, web and mobile apps, and cloud services. Haiku brings improvements over previous models, matching or surpassing the performance of the earlier Claude 3 Opus model.

Claude 3.5 Haiku should be well-suited for tasks requiring quick, intelligent responses, such as customer service, e-commerce, and educational platforms.

Grok Introduces Image Generation Feature with Aurora

xAI has launched a new image generation model called Aurora. You can now create photorealistic images by entering text prompts in Grok's interface. Aurora stands out for its ability to accurately render real-world entities, text, logos, and human portraits. It also supports multimodal input for editing images or drawing inspiration from them. To access it, you'll need a premium subscription to X.

Harvard Releases Huge AI Training Dataset

In collaboration with Google and with funding from Microsoft and OpenAI, Harvard University is about to release a huge dataset for training AI models. It includes nearly a million books in the public domain. According to the project's authors, the initiative aims to advance AI by providing a diverse, high-quality, and ethically sourced resource for training natural language processing models and other applications.

The dataset will be available through the Harvard Library Public Domain Corpus.

The exact release date has yet to be announced, but this is an important initiative that should make building quality LLMs noticeably easier.

Useful Tools ⚒️

iMemo – Transform Your Recordings into Searchable Text and Summaries

SmythOS – Build, debug, and deploy AI agents in minutes

AISmartCube – Build AI tools like you're playing with Legos

iBrief – Summarize Articles into Insights in Seconds

RabbitHoles AI – Chat with AI on an Infinite Canvas

RabbitHoles AI is a platform that lets you converse with AI on an infinite canvas. It creates multiple connected chats with AI models on a single canvas, with each node representing a different conversation. The app supports ChatGPT, Claude, Gemini, and Grok, and lets you bring your own API keys.

Weekly Guides 📕

Gemini 2.0 is Out NOW! Full Breakdown + How to Use for Free

How to AI Clone Yourself for FREE | HeyGen Tutorial

This AI Writes Viral Videos in 10 Minutes (Poppy AI Tutorial)

AI Image to 3D Game Ready Character Model | Unreal Engine 5 (Tutorial)

Complete Guide on Using Claude AI API with Python

AI Meme Of The Week 🤡

AI Tweet Of The Week

Would you watch a movie like that?

(Bonus) Materials 🏆

The World’s Most Popular AI Marketing Tools

Smart Glasses Are Going to Work This Time, Google's Android President Tells CNET

Why the next leaps towards AGI may be “born secret”

What can Generative AI actually do well?

Vertical AI Agents Could Be 10X Bigger Than SaaS
