Veo 3.1 and OpenAI’s twist | Weekly digest

AI updates, News and Discoveries

Hi! Welcome to the latest Creators’ AI Edition.

Get pumped for this week’s AI drops! We have the new Veo 3.1, Anthropic’s Haiku 4.5 (arriving a year after its predecessor), and some juicy OpenAI news.

Let’s dive in!

Featured Materials 🎟️

News of the week 🌍

Useful tools ⚒️

Weekly Guides 📕

AI Meme of the Week 🤡

AI Tweet of the Week 🐦

Bonus Materials 🎁

Featured Materials 🎟️

Google introduces Veo 3.1

Google has just unveiled the upgraded Veo 3.1. The model comes with richer audio output, granular editing controls, and better image-to-video results. (October is a hot month for video models, isn’t it?)

What is new?

The headline feature: you can now set the first and last frames of a video, which wasn’t possible before.

The model accepts up to three reference images to keep a scene consistent and to control its objects and style.

Veo 3.1 also introduces Scene Extension, which can extend videos to 1 minute. The base clip length, however, remains 4, 6, or 8 seconds (reference-image-to-video supports only 8 seconds).

Both the Standard and Fast versions of Veo 3.1 are integrated across the Google ecosystem.

Google also claims enhanced realism that captures true-to-life textures.

Source: Google Cloud

Google also plans to add object removal for videos. Deleting objects from photos no longer surprises anyone, so let’s see what this update brings.

The model is already available in Google’s Flow video editor, the Gemini app, and the Vertex AI and Gemini APIs. By the way, since Flow launched in May, people have made over 275 million videos with it.

Is it a breakthrough?

Twitter and Reddit are crawling with comparisons to Sora 2. Expectations have risen since Sora 2’s release, and against that bar Veo 3.1 feels more like a pragmatic next step in Google’s video lineup than a major overhaul.

The model now natively generates synchronized audio (dialogue, ambient sound, and effects) and gives creators more control over their scenes. Does Veo 3.1 really surpass Sora 2? Some call it a “Sora-killer” for its rich detail, but that’s (obviously) overhyped. Sora 2 has better storytelling flow, while Veo 3.1 seems to deliver more vivid visuals.

Users without a subscription receive 100 free credits per month, while AI Pro subscribers receive 1,000 (about 3 videos per day).

Try it here: Click, or on Higgsfield AI

News of the week 🌍

Anthropic’s Claude Haiku 4.5

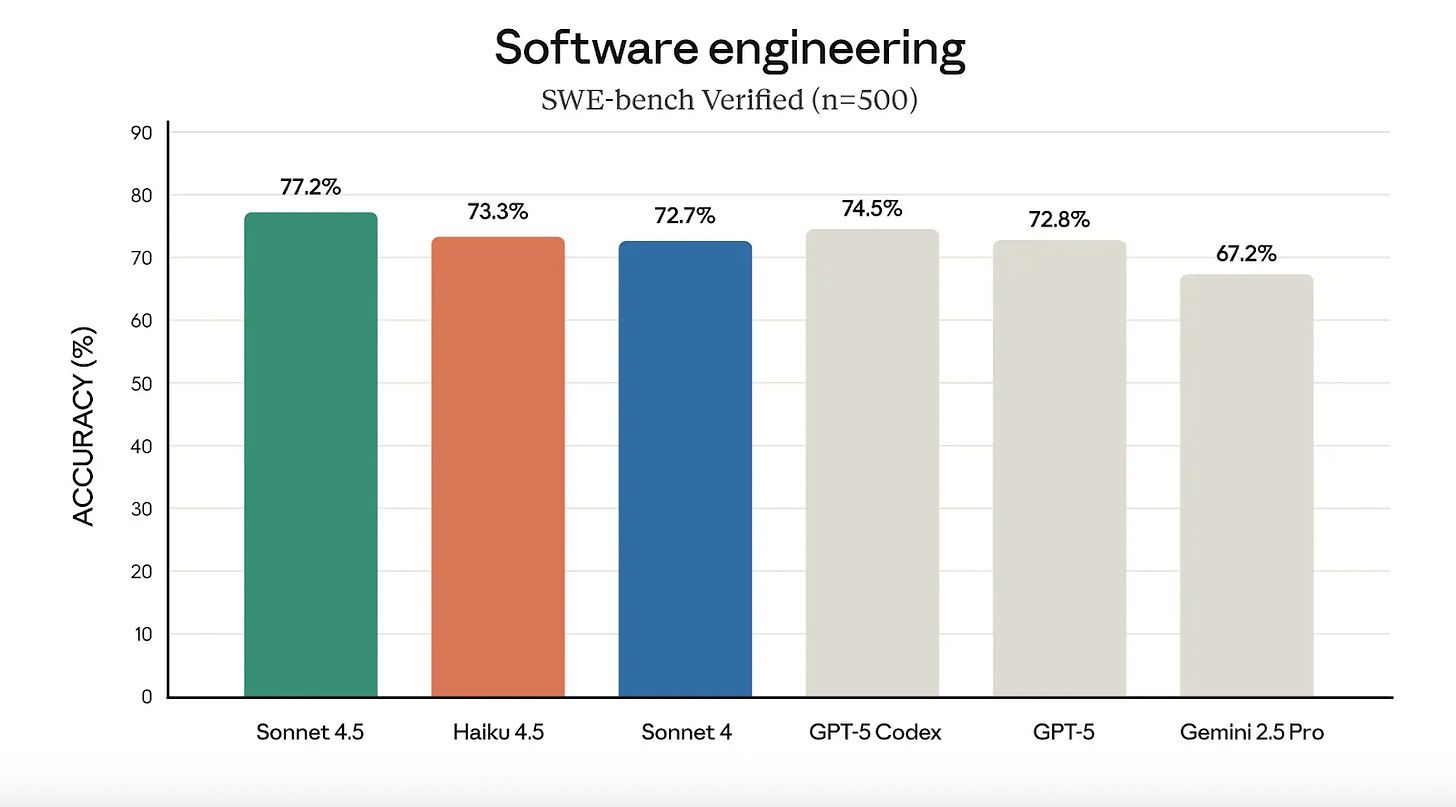

Haiku 4.5 is out, and it’s 3x cheaper and 2x faster than Sonnet 4. It is also strong at vibe-coding and computer use, which makes it handy for rapid prototyping and building agents quickly. Haiku 4.5 supports extended thinking and pairs well with Sonnet 4.5: Sonnet 4.5 can break a complex problem into a multi-step plan and deploy a fleet of Haiku 4.5s to crush the subtasks in parallel. All in all, it offers near-frontier coding quality at a low cost. However, for heavy reasoning and multi-step analysis, Sonnet 4 still wins.

Pricing is just $1 per million input tokens and $5 per million output tokens.
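To get a rough feel for what those rates mean in practice, here is a minimal Python sketch that estimates the cost of a single request. It assumes the $1/$5 per-million-token list prices quoted above and ignores any discounts (for example, prompt caching or batch pricing). The function name and the example workload are purely illustrative.

```python
# Per-million-token list prices in USD for Haiku 4.5, as quoted in this digest.
# Assumption: no caching or batch discounts are applied.
HAIKU_45_INPUT_PER_M = 1.0
HAIKU_45_OUTPUT_PER_M = 5.0


def request_cost(input_tokens: int, output_tokens: int,
                 input_per_m: float = HAIKU_45_INPUT_PER_M,
                 output_per_m: float = HAIKU_45_OUTPUT_PER_M) -> float:
    """Hypothetical helper: estimate the USD cost of one request
    from its token counts and per-million-token rates."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Multiply each token count by its rate, then convert from "per million".
    return (input_tokens * input_per_m + output_tokens * output_per_m) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 200k tokens in, 50k tokens out.
    cost = request_cost(200_000, 50_000)
    print(f"${cost:.2f}")  # $0.45
```

Note that output tokens cost five times as much as input tokens, so for chatty agents the length of the responses, not the prompt, usually drives the bill.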

Try it here: Click

“Adult mode” in OpenAI

Sam Altman announced that an “adult mode” will launch in ChatGPT in December. The company plans to roll out age-gating more fully and allow more content, such as erotica, for verified adults. In short, ChatGPT is moving away from strict restrictions: users will also be able to customize the chatbot’s responses to make them friendlier or more ‘human-like’.

This is part of OpenAI’s principle to “treat adult users like adults” and, at the same time, an unusual decision given the company’s historically strict content moderation. Apparently, it is a response to criticism that strict filters made ChatGPT “less enjoyable” and to community requests for more freedom.

Memory has been added to Qwen

The lack of built-in memory has long been one of Qwen’s biggest gaps. Until now, users have relied on Qwen-Agent modules to add features like memory or RAG through external components.

Now, Alibaba’s Qwen team has introduced Chat Memory, a native feature that lets the model remember user-approved details across sessions, making conversations more personal and context-aware. According to the team, it is designed to keep track of ongoing context, store important facts you ask it to remember, and bring them up naturally in future chats.

MAI-Image from Microsoft

Microsoft AI released MAI-Image-1, its first image generator developed entirely in-house. According to the company, it is best suited for creating photorealistic landscapes and nailing details like shadows, reflections, and tricky lighting.

By the way, Microsoft involved professional designers and artists during training to avoid typical “AI clichés” like repetitive faces or overly glamorous landscapes. It has already climbed into the top 10 on LMArena’s leaderboard, which is a big leap for Microsoft. The model complements Microsoft’s AI product line, which already includes the MAI-Voice-1 voice generator and the MAI-1-preview chatbot.

They’re planning to hook it up with Bing Image Creator and Copilot soon.

Google and Yale University’s Treatment Discovery with AI

Google and Yale University introduced C2S-Scale 27B, a foundation model built on the open-source Gemma 2 architecture. It has already proposed a new cancer treatment approach, and researchers confirmed the hypothesis through experiments in living cells, a significant step for AI in oncology.

C2S-Scale “reads” single-cell data like a language, analyzing how cells behave and react to drugs. Isn’t that cool? In tests, the approach it proposed made tumors about 50% more visible to the immune system when weak interferon signals were present in the environment.

The model is already available on Hugging Face, and with its help, scientists can speed up drug screening by 10-100 times.

Useful tools ⚒️

Mailmodo – Email Marketing Automation Simplified With AI Agents

LambdaTest – GenAI-powered Quality Engineering Platform

n8n – Workflow Automation Tool Designed for Technical Users

Flask – Notion + Loom for video collaboration

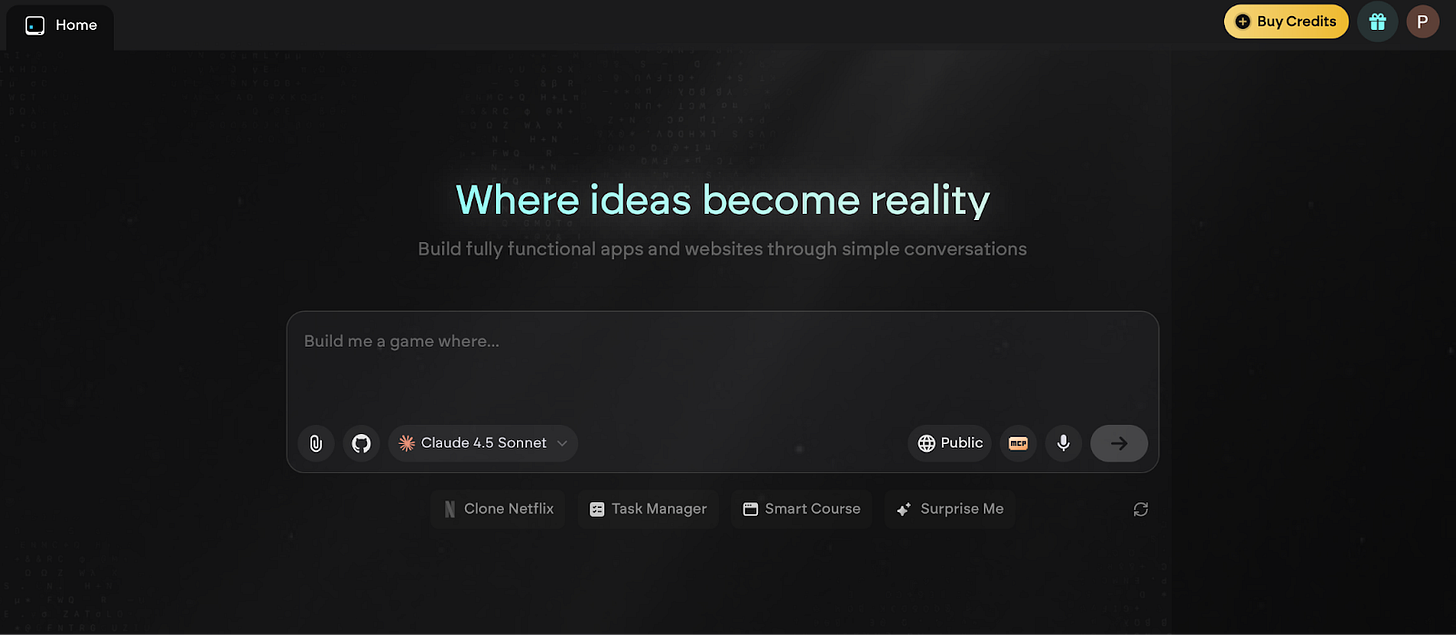

Emergent – Advanced AI app builder that ships full-stack apps

Emergent turns your ideas into real apps from just a prompt. Its multi-agent system handles planning, coding, testing, and deployment to deliver bug-free apps. It claims the #1 spot on SWE-Bench and more than 1.5M users who have built over 2M apps. If you’ve wanted to delve into app creation, this might be your chance.

Weekly Guides 📕

Ultimate VEO 3.1 Update EXPLAINED: How To Use Google Veo-3 For Beginners

Wan 2.2 Animate: FREE AI Character Swap & Lip-sync | ComfyUI Tutorial

AI Meme of the Week 🤡

AI Tweet of the Week 🐦

Bonus Materials 🎁

Generative AI Full Course 2025 | Gen AI Tutorial For Beginners | Generative AI Course | Simplilearn - to watch (very long but what a discovery!)

Best Free AI Training Courses You Can Start in October 2025 - to try

Top AI Research Papers of 2025: From Chain-of-Thought Flaws to Fine-Tuned AI Agents - to read