Sora 2 Is More Than Just Memes

Image-to-Video Use Cases & Practical Applications

When Sora 2 launched a month ago, things got crazy fast.

Within hours, the Sora feed was flooded with videos of Pikachu doing ASMR, dead celebrities brought back to life, and endless memes of Sam Altman.

This post was prepared with guest author Daniel Nest, creator of Why Try AI.

If you also want to write for Creators AI, send us an email here

Sure, shenanigans can be fun. In moderation. I always felt that OpenAI’s decision to package Sora 2 inside a “social app for AI slop” did a disservice to the model’s potential.

So I want to rectify things by sharing a few hands-on use cases that aren’t all “[meme] but with [person’s face].”

Let’s look at Sora’s image-to-video feature and the cool practical stuff you can do with it.

Buckle up!

Signing up for and using Sora 2

Sora 2 is currently only available in the US and Canada (though you can change your App Store country or use a VPN for the web version).

To learn more about signing up and finding Sora 2 invite codes, check out this article:

Note: While you can also access Sora 2 via API or third-party platforms, I’ll focus on OpenAI’s primary sora.com web option for this guide.

How to use the image-to-video feature

In addition to basic text-to-video prompting, Sora lets you upload a reference image.

Simply click the “+” icon at the bottom-left of the prompt input field:

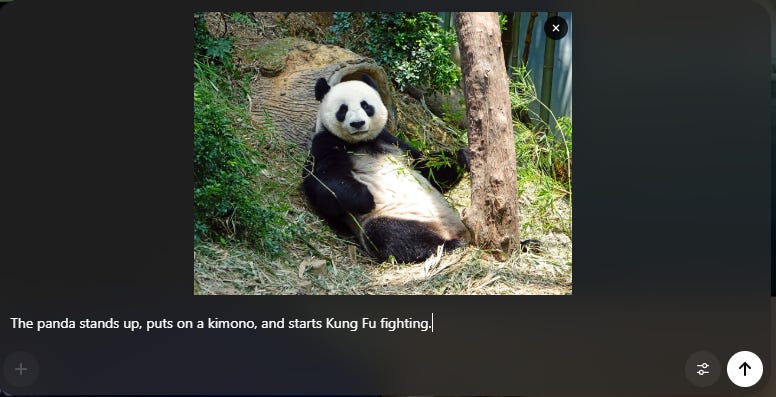

This lets you upload an image from your computer, which you can then combine with an optional text prompt to describe the action:

Sora 2 will create a video from your image+prompt combo, with occasionally mixed results:

(Yes, many kung-fu experts don’t know how to dress themselves.)

What can you do with Sora image-to-video?

This seemingly simple concept of combining a reference image with a text prompt lets you use Sora 2 for all kinds of practical applications. For instance:

First frame of a scene

This is the “vanilla” use case. You feed Sora 2 an image to use as the starting frame, then describe what should happen from that exact moment. See my panda example above.

Practical applications: Any scenario where you need full compositional control over the scene. Since your image acts as the starting frame, Sora 2 will respect the visual style and placement of all the objects and characters.

Pro tip: Try adding scene directions and annotations directly to the image, as Sora 2 can often parse those:

Here’s the first-take result as a proof of concept: