Midjourney Video, OpenAI Gate, Gemini 2.5 Pro | Weekly edition

PLUS HOT AI Tools & Tutorials

Hey there! Welcome to your weekly AI round-up!

This week, Midjourney dives into video (right as Disney and Universal start throwing lawsuits), Google’s Gemini 2.5 Pro steps into the spotlight, and OpenAI’s Codex is now multitasking like a pro. The OpenAI Files offer a revealing look at how the company is run. Perplexity unlocks easy video creation via social media, Higgsfield levels up image editing, and Google Search gets a real-time, Gemini-powered upgrade. Meanwhile, OpenAI is gearing up for bio-risk safety, and there’s a fresh batch of AI tools, guides, and viral moments to keep you inspired.

Let’s jump in: here’s what’s hot in AI this week!

Also, have you already read our post about how solopreneurs are using AI agents?

This Creators’ AI Edition:

Featured Materials 🎟️

News of the week 🌍

Useful tools ⚒️

Weekly Guides 📕

AI Meme of the Week 🤡

AI Tweet of the Week 🐦

(Bonus) Materials 🎁

Midjourney’s First Video Model

Midjourney has just launched its first video generation model, a web-only tool that turns any image into short 5-second clips, arriving just as Disney and Universal take legal action against the company over copyright. The new model, called V1, offers both automatic animation and manual prompts, so users can describe camera moves and actions themselves. Each generation produces four clips that can be extended to 20 seconds, and pricing is well below competitors’. V1 works with images from Midjourney and from outside sources, and the videos keep the distinct Midjourney style fans love. CEO David Holz says this launch is only the beginning, laying the groundwork for future real-time worlds powered by image, video, and 3D models. V1 only turns images into video and, unlike some rival models, doesn’t generate audio, but it stands out for its signature look and points toward where Midjourney wants to go next.

Gemini 2.5 Pro Launches for Public Release

Gemini 2.5 Pro is now officially out of preview, and Google has introduced Gemini 2.5 Flash Lite as the new go-to option for lighter, faster tasks. This new Lite model is meant to replace the old Gemini 2.0 Flash, offering similar pricing and an optional reasoning mode. The full 2.5 Flash model is a step up in quality but comes with a noticeably higher price. The lineup might feel a bit confusing, but at least 2.5 Pro is finally stable, so there’s no need to keep updating the model slug every couple of weeks.

News of the week 🌍

Google Launches Search Live with New AI Mode

Google has launched Search Live in AI Mode, a real-time, voice-powered way to search with Gemini. In the Google app (U.S. only, via Search Labs), tap the “Live” icon, ask your question out loud, and get spoken answers plus helpful on-screen links. It keeps working in the background while you use other apps, and you can read a transcript, follow up by speaking or typing, and revisit past conversations in your AI Mode history. Behind the scenes, it combines a custom Gemini model with Google’s search systems and uses “query fan-out” to pull in broader, more diverse information. Camera-based live input is on the way, too, so you’ll be able to show Google what you see and chat about it in real time.
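The general idea behind query fan-out is to split one question into several narrower sub-queries, run them in parallel, and merge what comes back. Below is a minimal Python sketch of that pattern, assuming a toy in-memory index. It is not Google’s implementation: `FAKE_INDEX`, `FACETS`, `expand`, `search`, and `fan_out` are all hypothetical names made up for illustration.

```python
import asyncio
from typing import Dict, List

# Hypothetical in-memory index standing in for a real search backend.
FAKE_INDEX: Dict[str, List[str]] = {
    "hiking boots waterproof": ["gearlab.example/boots", "reviews.example/waterproof"],
    "hiking boots wide feet": ["reviews.example/wide-fit", "gearlab.example/boots"],
    "hiking boots under $150": ["deals.example/boots"],
}

# Hypothetical facets; a real system would likely derive these with a model.
FACETS: List[str] = ["waterproof", "wide feet", "under $150"]


def expand(query: str) -> List[str]:
    """Fan one query out into several narrower sub-queries."""
    return [f"{query} {facet}" for facet in FACETS]


async def search(subquery: str) -> List[str]:
    """Pretend search call; the sleep stands in for network latency."""
    await asyncio.sleep(0)
    return FAKE_INDEX.get(subquery, [])


async def fan_out(query: str) -> List[str]:
    """Run all sub-queries concurrently, then merge results without duplicates."""
    results = await asyncio.gather(*(search(q) for q in expand(query)))
    merged: List[str] = []
    seen = set()
    for hits in results:  # gather() returns results in sub-query order
        for url in hits:
            if url not in seen:
                seen.add(url)
                merged.append(url)
    return merged


if __name__ == "__main__":
    print(asyncio.run(fan_out("hiking boots")))
```

The deduplication step matters: overlapping sub-queries often surface the same pages, and merging them is what turns several narrow searches into one broader answer.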

OpenAI Launches “OpenAI Podcast”

OpenAI has launched a new “OpenAI Podcast” hosted by former OpenAI engineer Andrew Mayne. In the first episode, CEO Sam Altman shared that GPT-5 is likely to be released sometime this summer.

Introducing The OpenAI Files

The Midas Project and the Tech Oversight Project just released The OpenAI Files, an interactive deep dive published June 18 that pulls together everything from lawsuits to leak-filled Slack chats to paint a picture of growing unease about how OpenAI is run. It highlights big shifts: the original capped-profit model is being loosened and may vanish by 2025, and insiders, including co-founder Ilya Sutskever, are raising red flags about Sam Altman’s leadership and decision-making. The Files also document safety team exits, complaints about rushed product rollouts, strict NDAs, and board shakeups tied to Altman’s inner circle. It’s a compelling, messy snapshot of a company wrestling with its mission, its growth, and who gets to call the shots.

Higgsfield released “Higgsfield Canvas”

Higgsfield has launched Canvas, a slick new image editing tool that offers pixel-perfect inpainting steered by natural-language prompts. You can upload your own images or choose an avatar, highlight exactly where you want edits, and seamlessly add products, tweak scenes, or swap elements, all with GPT-style precision. Early users are already impressed: it’s being praised as “a state-of-the-art model” that lets you “paint products directly onto your image with pixel‑perfect control,” which makes it ideal for mockups and e-commerce designs. The interface is simple enough for anyone to use, and with its powerful inpainting engine, Canvas looks set to take on the competition in AI-driven visual editing.

OpenAI ramps up efforts to address bioweapon risks

OpenAI has just published a blog post detailing the safety steps it’s taking as its next generation of AI models approaches a level where they could potentially help create biological weapons. The company expects models beyond the current o3 to reach “high risk” for biological threats under its own Preparedness Framework, so it is stepping up mitigations: training models to refuse dangerous requests, using always-on monitoring to spot suspicious activity, and running more advanced red-teaming. OpenAI is also organizing a biodefense summit in July with government researchers and NGOs to discuss the risks and how to prepare. This follows similar moves from Anthropic, which just tightened its protocols around Claude 4. As AI gets more powerful, these precautions matter more than ever, and OpenAI is making it clear that we’re headed into seriously uncharted territory.

Codex now supports multi-response generation

Codex now lets you generate multiple responses for a single task. This “best of N” approach can seriously boost performance, just like in benchmarks where models get several tries at a problem. There’s even talk that o3-pro works by picking the best of ten responses. It suggests there’s still plenty of headroom: you can squeeze better results out of existing systems today and eventually train new models to get it right on the first try.

On top of that, OpenAI has rolled out updates to ChatGPT’s Projects and Search features. You can now run Deep Research inside a project, ChatGPT will pull in relevant info from past chats in that project, and you can search using images, making the whole experience smarter and more connected.
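To make the “best of N” idea from the Codex item concrete, here is a minimal Python sketch: draw N candidate answers, score each one, keep the winner. It assumes a toy setup where each “sample” is a small candidate function and the scorer is a handful of unit tests. This is not how Codex or o3-pro actually works; `best_of_n`, `CANDIDATES`, `TESTS`, `pass_rate`, and `toy_generate` are hypothetical names for illustration only.

```python
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")


def best_of_n(generate: Callable[[int], T],
              score: Callable[[T], float],
              n: int) -> Tuple[T, float]:
    """Draw n candidates and keep the one the scorer rates highest."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate(i) for i in range(n)]
    best = max(candidates, key=score)  # ties go to the earliest candidate
    return best, score(best)


# Toy stand-in for a model: each "sample" is a candidate absolute-value
# function, and some samples are buggy, as real model outputs can be.
CANDIDATES: List[Callable[[int], int]] = [
    lambda x: x,                   # wrong for negative inputs
    lambda x: -x,                  # wrong for positive inputs
    lambda x: x if x > 0 else -x,  # correct
]

# Hypothetical verifier for this sketch: (input, expected output) pairs.
TESTS = [(-3, 3), (0, 0), (5, 5)]


def pass_rate(fn: Callable[[int], int]) -> float:
    """Fraction of unit tests the candidate passes."""
    return sum(fn(x) == want for x, want in TESTS) / len(TESTS)


def toy_generate(i: int) -> Callable[[int], int]:
    # Cycles deterministically so the example is reproducible; a real
    # system would sample from the model with some randomness.
    return CANDIDATES[i % len(CANDIDATES)]


if __name__ == "__main__":
    for n in (1, 3):
        best, s = best_of_n(toy_generate, pass_rate, n)
        print(f"n={n}: pass rate {s:.2f}")
```

With one try, the sketch settles for a buggy candidate that passes two of three tests; with three tries, the scorer finds the correct one. The catch is that best-of-N is only as good as its scorer, which is why coding tasks with runnable tests are a natural fit.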

Codex is now available to ChatGPT Plus subscribers!

Perplexity launches Veo 3 video generation via @AskPerplexity tags

Perplexity has added new video generation features, letting users create Veo 3 videos with audio simply by tagging the @AskPerplexity account on social media, making it easy to bring AI-generated video content to your posts.

MiniMax - China’s New Reasoning Model

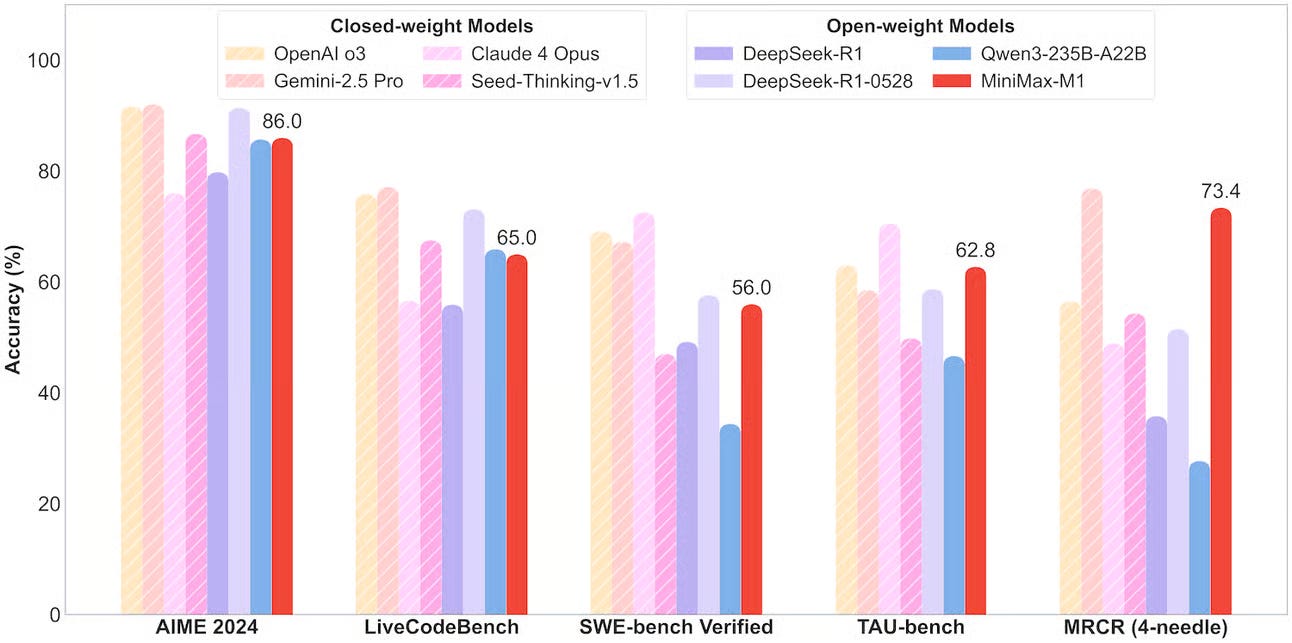

Just when it looked like DeepSeek was China’s big moment in AI, Shanghai’s MiniMax has come out swinging with M1, which it bills as the world’s first open-source, large-scale hybrid-attention reasoning model, and it’s turning heads even in Silicon Valley. M1 is a mixture-of-experts model with a custom Lightning Attention mechanism and a massive 1-million-token context window, matching Google’s Gemini 2.5 Pro in context length and tool-use abilities. The 456-billion-parameter model’s reinforcement-learning training reportedly cost just $534,700, showing you don’t need a huge Silicon Valley budget to build cutting-edge AI. M1 delivers fast, efficient reasoning, with 8 times the context of DeepSeek R1 and 70 percent less compute on deep reasoning tasks, making long-form thinking much more practical. It comes in 40K and 80K thinking-budget variants; the 80K version outperforms the 40K one on most benchmarks and competes with models like Gemini 2.5 Pro, DeepSeek R1, and Claude 4 Opus on long context and tool use, though it lags in factuality. M1’s weights are on Hugging Face, you can try the models for free in MiniMax’s chat, and API pricing is among the most competitive out there.

AI Use in the Workplace Doubles

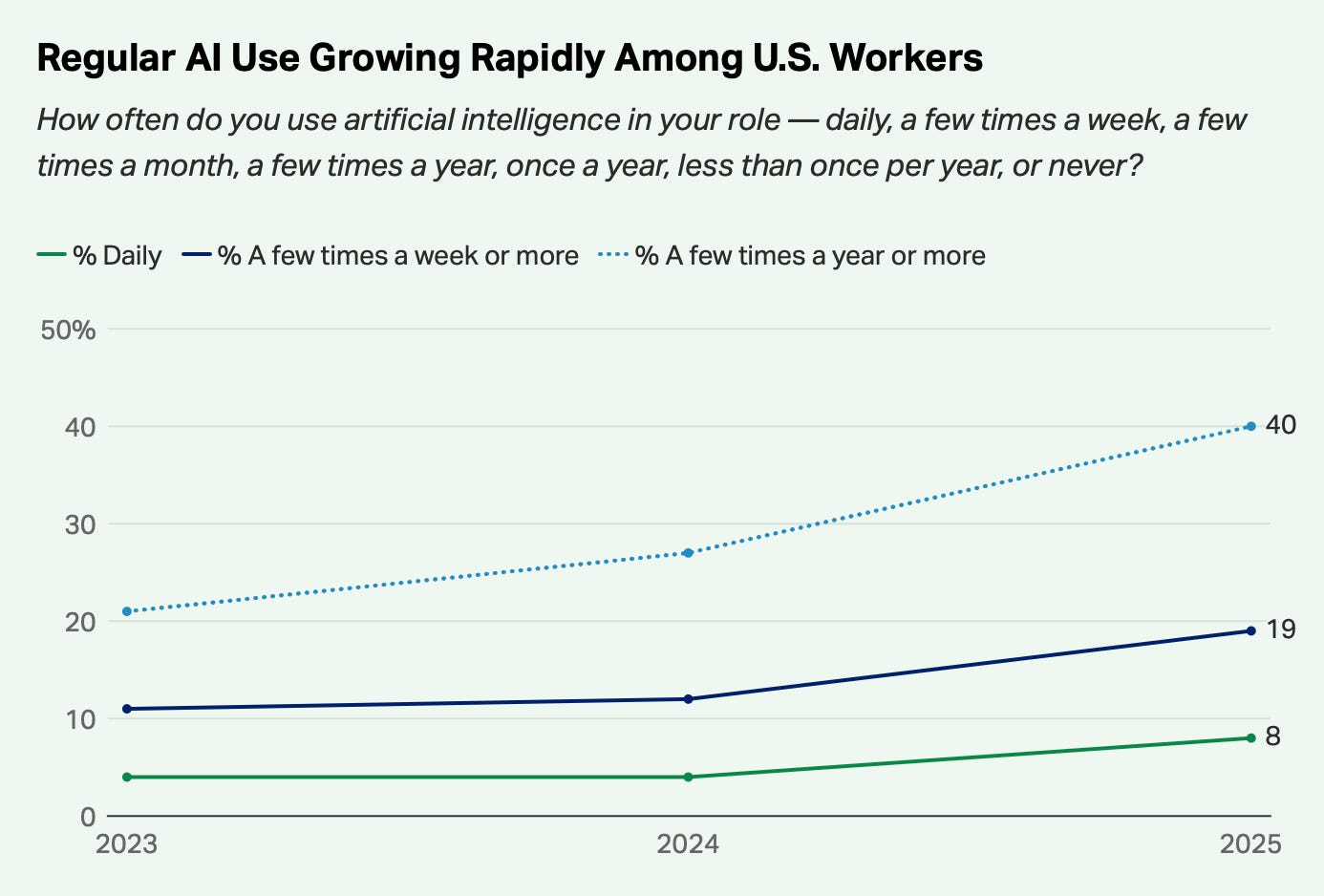

A new Gallup poll shows that the number of U.S. workers using AI at work has nearly doubled in the past two years, with 40% now saying they use it at least a few times a year. Adoption is highest among tech, professional services, and finance workers, and managers are twice as likely as staff to use AI. Still, only about one in five employees say their company has a clear AI strategy.

Useful tools ⚒️

Tila AI - Create, code, search + design AI content all in one canvas

Pulze - Create AI agents and workflows without engineers

AgentX 2.0 - Build your own cross-vendor multi-agent AI team

Second Brain - AI visual board and knowledge base

Wonderish - Canva of Vibe Coding

Wonderish has helped 1,000,000+ people create no-code games. Now Wonderish is bringing that simplicity to vibe coding: build stunning pages, apps, and experiences with zero learning curve. No code. No confusion. Wonderish is vibe prompting for everyone.

Weekly Guides 📕

Build and Deploy a Remote MCP Server to Google Cloud Run in Under 10 Minutes

How to Bring Out the Best in Claude Code

Agentic AI: A Self-Study Roadmap

Create AI Videos with Midjourney V1: Your Images Now Move!

AI Meme of the Week 🤡

AI Tweet of the Week 🐦

Do you prefer context engineering or prompt engineering?

(Bonus) Materials 🎁

What workers want from AI (Stanford Study)

What Google Translate Can Tell Us About Vibecoding

A conversation with the creators of the MCP

Deep Research Agent for Large Systems Code

Missed our previous updates? Don’t worry, here they are: