Image Gen, Editing & Design Tools

Full tutorial on how to use AI with images

Hi! It's been a while since we've talked about image generators.

I think everyone who wanted to has already mastered Midjourney and DALL-E, but what about new tools? Well, there have been quite a few of them lately.

In this post, we’ll cover some practical use cases, the fresh models you need to know about, and how to use them (+ a bunch of prompts).

By the way, you can try them for free.

Google Dives Into Image Editing

The starting point for the post you're reading was the Gemini 2.0 update.

After several missteps in AI, Google got its act together and started surprising us. However, for some reason, many creators only paid attention to the reasoning models and Deep Research. The image editor didn't get the attention it deserves.

That doesn't seem fair to me. A few days of tests have led me to conclude that Gemini can already replace several apps, like Photoshop and Figma.

Not in everything, but at least in basic tasks.

Let's look at why this is so with five extraordinary use cases.

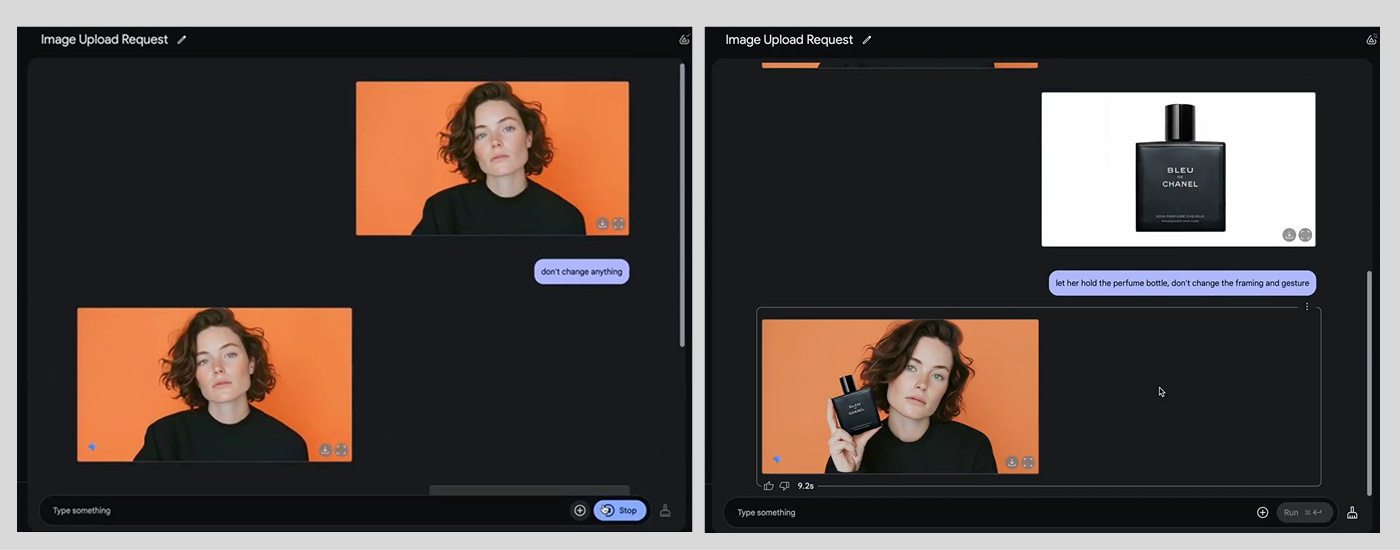

1. Object Combination

With the Gemini 2.0 update, Google's AI has learned to handle multiple images at once, including realistically blending objects from different pictures. So now you don't have to spend time cutting out an object and arranging layers in Photoshop.

This can be a great tool for creators preparing commercials and promos. Even if you can't get a finished image, you'll have a ready reference for clients or yourself.

And all you have to do is ask the chatbot.