How Context Engineering Can 10x Your AI Model's Performance

Real Cases & Tutorial

You know how it works when someone needs to handle a tricky customer complaint? It doesn’t just get handed off without context. The customer’s history gets pulled up, their past emails are reviewed, and the latest policy docs are shared. Everything the person needs to actually solve the problem is put in front of them.

That's context engineering for AI, but with a twist. Instead of one conversation, you're juggling multiple data sources, keeping track of what happened before, and feeding the AI exactly what it needs without drowning it in information.

This probably sounds familiar if you've been working with AI tools, but it is not the same thing as writing better prompts. The difference should be clear by the end of this post.

What is Context Engineering?

Let's start with a simple analogy. Early AI applications were like giving someone a single sticky note with instructions and expecting them to complete a complex project. This was prompt engineering, crafting the perfect set of instructions in a single text string.

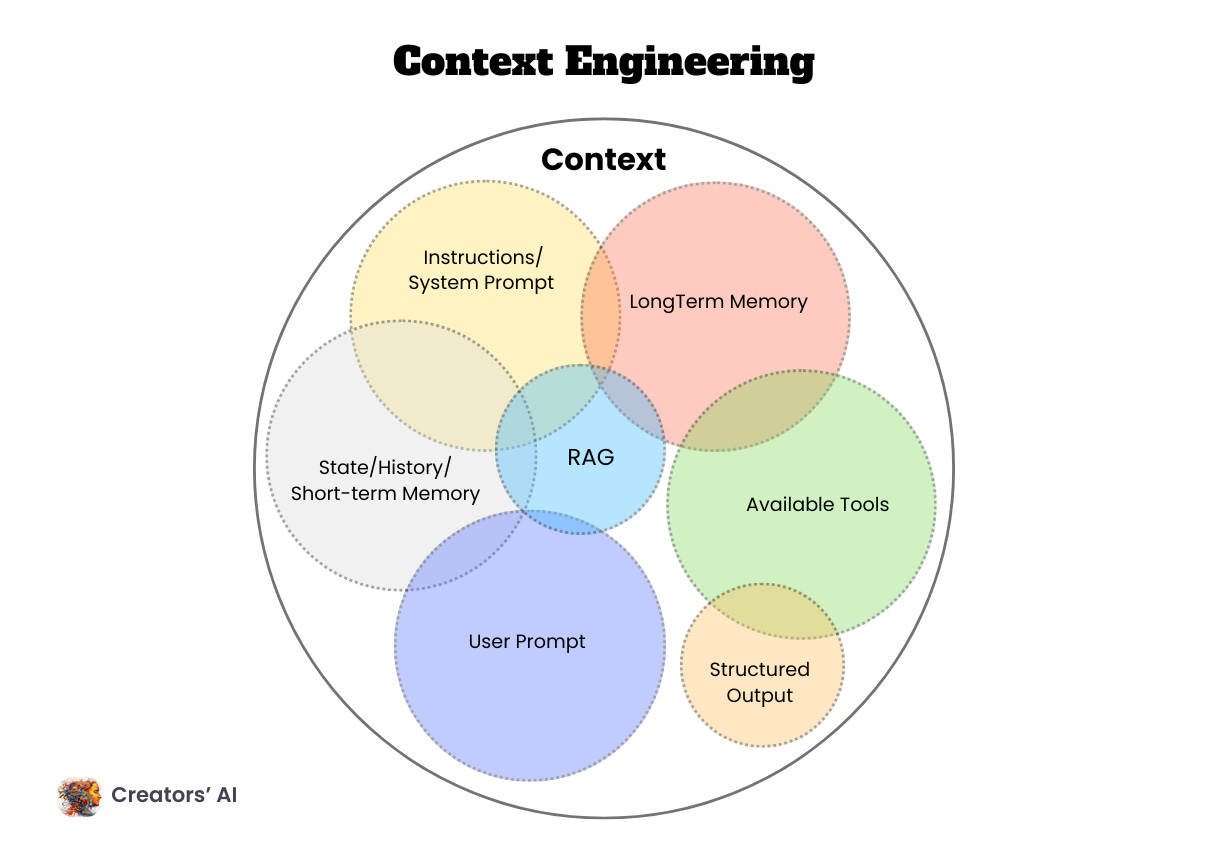

Context engineering is different. It's the discipline of designing and building dynamic systems that provide the right information and tools, in the right format, at the right time, to give an AI everything it needs to accomplish a task.

Context engineering operates on four fundamental principles:

System-Based, Not String-Based: Context isn't just a static prompt template. It's the output of a system that runs before the main AI call.

Dynamic and Adaptive: Created on the fly, tailored to the immediate task. For one request this could be calendar data, for another email or a web search.

Information + Capabilities: You need the right information and the right tools. Giving the AI the right tools is just as important as giving it the right information.

Format Matters: Just like communicating with humans, how you communicate with AIs matters. A short but descriptive error message will go a lot further than a large JSON blob.
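The four principles above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Context` class, the tool names, and the keyword-based routing are all hypothetical stand-ins, and a real system would likely use a classifier or the model itself to decide which sources to pull.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Everything handed to the model for one request (hypothetical structure)."""
    instructions: str
    facts: list[str] = field(default_factory=list)
    tools: list[str] = field(default_factory=list)


def summarize_error(error: dict) -> str:
    """Format Matters: turn a raw error payload into one short, descriptive line."""
    return f"{error['tool']} failed: {error['message']} (code {error['code']})"


def build_context(request: str, sources: dict[str, list[str]]) -> Context:
    """System-Based: runs before the model call and returns the assembled context."""
    ctx = Context(instructions="Help the user with their request.")
    text = request.lower()
    # Dynamic and Adaptive: pull only the sources this request appears to need.
    # Keyword matching is a naive placeholder for real routing logic.
    if "meeting" in text or "schedule" in text:
        ctx.facts += sources.get("calendar", [])
        # Information + Capabilities: expose a tool alongside the data.
        ctx.tools.append("create_calendar_event")
    if "email" in text:
        ctx.facts += sources.get("email", [])
        ctx.tools.append("send_email")
    return ctx
```

Calling `build_context("Schedule a meeting with Ana", sources)` would include only the calendar entries and the calendar tool, while an email-related request would pull a different slice of the same sources.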

Understanding these principles helps explain why context engineering has become essential for building reliable AI applications. But to see why this approach matters so much right now, we need to understand how it compares to other methods you've probably heard about.

Context Engineering vs. Prompt Engineering vs. RAG

Before going any further, let’s clear something up first. There's significant confusion in the AI community about these terms, and this confusion is costly. Choosing the wrong approach can lead to over-engineered solutions that waste resources, or under-engineered ones that fail in production. Understanding these distinctions helps you invest your time and budget in the right solution for your specific needs.

Many developers still think "better prompts" will solve complex AI application problems. This is like believing that writing better email subject lines will solve all your customer service issues. While prompts are important, they're just one small piece of a much larger system. Now, let's examine how these three approaches work in practice and when each one makes sense for your business.

Prompt Engineering is like writing a perfect instruction manual for a single task. You craft the exact words to get the AI to behave correctly for one specific scenario.

Example: A company wants to translate product descriptions.

Simple prompt: "Translate this product description to Spanish, maintaining a professional tone."

Works when: You have a clear, repetitive task with consistent inputs

Fails when: You need the translation to consider brand guidelines, target audience, or regional preferences

RAG is like giving someone access to a library before they answer questions. The AI retrieves relevant information from a knowledge base and uses it to provide more accurate, up-to-date responses.

Example: A company wants to answer questions about their policies and procedures.

How it works: User asks "What's our vacation policy?" → System retrieves current HR documents → AI answers based on retrieved information

Works when: You need current, factual information that the AI wasn't trained on

Fails when: The task requires multiple steps, personalization, or actions beyond just providing information

Context Engineering is like having a highly skilled assistant who knows exactly what information to gather, what tools to use, and how to present everything for maximum effectiveness. It orchestrates multiple information sources, memory systems, and capabilities.

Example: A company wants to automate customer support.

How it works: Customer message → System gathers customer history, checks inventory, reviews past interactions, identifies available actions → AI provides personalized response and takes appropriate action

Works when: You need reliable, personalized, multi-step assistance that adapts to different situations

This is the evolution path: context engineering incorporates prompt engineering (for instructions) and RAG (for information retrieval) as components of a larger system.
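To make the comparison concrete, here is a minimal sketch of what each approach hands to the model. All function names are hypothetical, and `retrieve` is a naive keyword match standing in for a real search index or vector store.

```python
def prompt_only(description: str) -> str:
    """Prompt engineering: one fixed instruction wrapped around the input."""
    return (
        "Translate this product description to Spanish, "
        "maintaining a professional tone.\n\n" + description
    )


def retrieve(question: str, documents: dict[str, str]) -> list[str]:
    """Return documents whose title shares a word with the question (placeholder)."""
    words = set(question.lower().replace("?", "").split())
    return [text for title, text in documents.items()
            if words & set(title.lower().split())]


def rag_prompt(question: str, documents: dict[str, str]) -> str:
    """RAG: retrieve first, then answer from what was retrieved."""
    passages = "\n".join(retrieve(question, documents))
    return f"Answer using only these documents:\n{passages}\n\nQuestion: {question}"


def context_engineered_prompt(message: str, history: list[str],
                              documents: dict[str, str], actions: list[str]) -> str:
    """Context engineering: instructions, memory, retrieval, and tools together."""
    sections = [
        "You are a customer support assistant.",
        "Customer history:\n" + "\n".join(f"- {h}" for h in history),
        "Relevant documents:\n" + "\n".join(f"- {p}" for p in retrieve(message, documents)),
        "Available actions: " + ", ".join(actions),
        "Customer message:\n" + message,
    ]
    return "\n\n".join(sections)
```

Note that the third function reuses the retrieval step from the second and still contains a written instruction, which is the sense in which the other two approaches become components rather than alternatives.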

Now that we've covered what context engineering is, it’s time to see why it has become so important!

Why Context Engineering Matters Now

The reality is that most of the time when an agent is not performing reliably, the underlying cause is that the appropriate context, instructions and tools have not been communicated to the model.

As AI applications have evolved from simple chatbots to complex, long-running agents, we've hit significant limitations:

Context Overload: AI systems can become overwhelmed with too much information.

Missing Critical Information: Without the right context, even the most advanced AI will fail.

Poor Information Formatting: How you present information dramatically affects AI performance.

Tool Integration Issues: AI systems need not just information, but capabilities to act on it.

Most agent failures are no longer model failures; they are context failures. When AI agents fail, it's usually because:

Information Gaps: The AI doesn't have access to crucial information needed for the task

Context Poisoning: Incorrect or hallucinated information makes it into the context

Context Confusion: Too much irrelevant information influences the response negatively

Context Conflicts: Different parts of the provided information contradict each other
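One way to guard against these four failure modes is to audit the context before it reaches the model. The sketch below is an assumption-heavy illustration: the `Item` structure, the relevance scores, and the idea of a trusted-source allowlist are hypothetical, and source filtering is only a crude proxy for catching poisoned content.

```python
from dataclasses import dataclass


@dataclass
class Item:
    key: str          # what the item is about, e.g. "refund_window"
    value: str
    source: str       # where the item came from
    relevance: float  # 0.0 to 1.0, assumed to come from a retriever


def audit_context(items, required_keys, trusted_sources, min_relevance=0.5):
    """Return (kept items, missing keys, conflicting keys)."""
    # Context Poisoning: drop items from sources we do not trust.
    # Context Confusion: drop items with low relevance to the task.
    kept = [i for i in items
            if i.source in trusted_sources and i.relevance >= min_relevance]

    # Information Gaps: which required keys have no surviving item?
    present = {i.key for i in kept}
    missing = sorted(set(required_keys) - present)

    # Context Conflicts: which keys carry more than one distinct value?
    values = {}
    for i in kept:
        values.setdefault(i.key, set()).add(i.value)
    conflicts = sorted(k for k, v in values.items() if len(v) > 1)

    return kept, missing, conflicts
```

A caller could refuse to invoke the model while `missing` is non-empty, and resolve or flag anything listed in `conflicts` before sending the context on.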

As AI systems become integral to business operations, context engineering becomes the bridge between raw AI capability and practical business value. Without it, you're essentially asking a brilliant consultant to solve complex problems while blindfolded and with their hands tied.

So why should you focus on Context Engineering?

Performance gaps are widening between demo and production AI systems - AI system performance depends more on deployment context and system design than on model selection alone

Information architecture directly impacts AI reliability - The same model can perform vastly differently depending on how information is structured and presented

Integration complexity is increasing exponentially - As AI systems connect to more data sources and business processes, context management becomes the critical bottleneck

Context engineering principles show measurable improvements - RAG implementations (which apply basic context engineering concepts) demonstrate significant accuracy gains over standalone model queries in production environments

Context Engineering in Action

So far, we’ve discussed the definition of context engineering, compared it with similar concepts, and explored its core principles. Now, let’s look at some real-world examples to better understand how context engineering is applied.

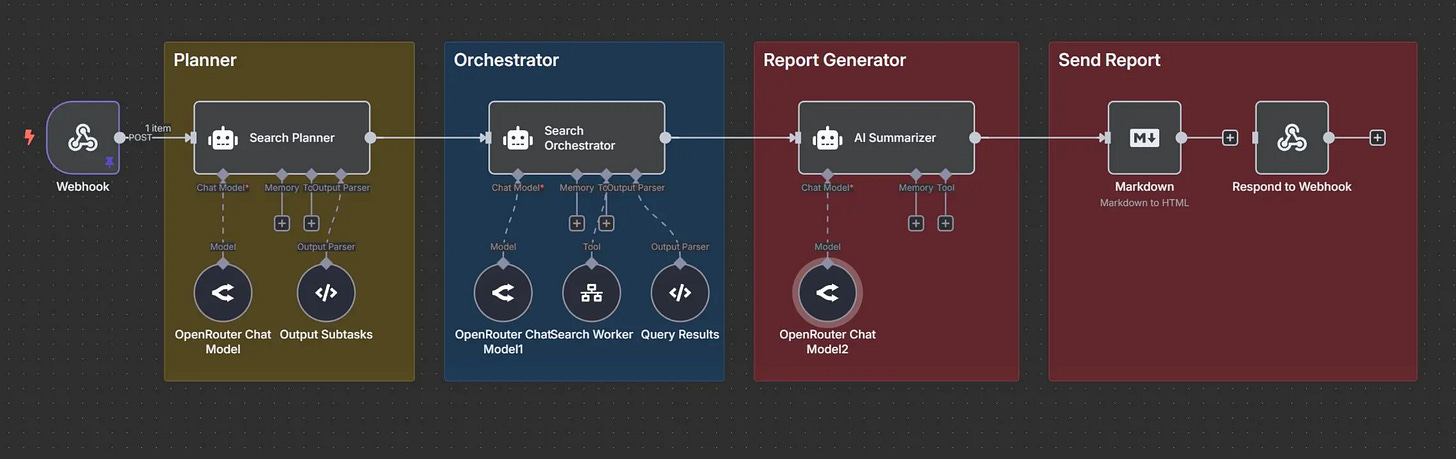

Example 1: Research Planner Agent

Imagine you’re designing a multi-agent research assistant that takes a complex user query and breaks it into manageable, focused search tasks.

In this workflow, context engineering starts right from the first line you feed the system. You frame the agent with a system prompt that doesn’t just say “plan the search.” Instead, it tells the AI:

You are an expert research planner. Your assignment is to take the user’s query and split it into specific search subtasks, each with clear identifiers, query focus, source type, time relevance, domain hints, and priority.

That’s not one-size-fits-all; it’s System-Based, Not String-Based. The system is built with structure, not left to wander inside a vague instruction.

Then the agent examines the user’s real input, for example, “What’s the latest on OpenAI development news?” It uses delimiters to clearly distinguish that content, ensuring it's not lost or mixed with other instructions. From there, the agent generates two (or more) subtasks, each designed for a different angle. One might focus on product updates from official blogs (source: “news”, time period: “past_week”), while another captures technical deep-dives from research archives (source: “academic”, time period: “recent”). Each subtask includes an explicit ID, a priority ranking, and even programmatically inferred dates (“start_date” and “end_date” derived from “past_week”), so each piece of context is Dynamic and Adaptive, tailored precisely to the query at hand.
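The subtask structure and the date inference described here could look something like the following. The field names mirror the ones mentioned in the text, but the `SearchSubtask` class, the `PERIOD_DAYS` mapping, and the choice to leave “recent” without fixed dates are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional

# Assumed mapping from a time-period label to a window length in days.
PERIOD_DAYS = {"past_day": 1, "past_week": 7, "past_month": 30}


@dataclass
class SearchSubtask:
    id: str
    query: str
    source_type: str   # e.g. "news" or "academic"
    time_period: str   # e.g. "past_week" or "recent"
    priority: int
    domain_hints: list[str] = field(default_factory=list)
    start_date: Optional[date] = None
    end_date: Optional[date] = None


def resolve_dates(task: SearchSubtask, today: date) -> SearchSubtask:
    """Fill start_date and end_date when the period maps to a fixed window."""
    days = PERIOD_DAYS.get(task.time_period)
    if days is not None:
        task.start_date = today - timedelta(days=days)
        task.end_date = today
    # Open-ended labels such as "recent" are left without explicit dates.
    return task
```

Because the dates are computed in code rather than guessed by the model, the same “past_week” label resolves to a different concrete window every day the planner runs.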

When you feed the AI not only the “what” but also the “how”, with structured metadata and dynamic context, the agent knows exactly how to behave, even before it starts fetching data. This blends Information + Capabilities: the planner isn't just thinking, since the system can later incorporate tool definitions or search API specifics into the context for execution planning.
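As a rough sketch of how the planner's context might be assembled, the function below wraps the user query in delimiters and appends short tool descriptions to the system prompt. The `<user_query>` tag, the tool dictionary shape, and the generic role/content message format are assumptions, not the API of any particular provider.

```python
PLANNER_PROMPT = (
    "You are an expert research planner. Your assignment is to take the user's "
    "query and split it into specific search subtasks, each with clear "
    "identifiers, query focus, source type, time relevance, domain hints, and "
    "priority. The query appears between <user_query> tags."
)


def build_messages(user_query: str, tools: list[dict]) -> list[dict]:
    """Assemble system and user messages for the planner call."""
    system = PLANNER_PROMPT
    if tools:
        # One short line per tool keeps the capability list easy to read.
        lines = [f"- {t['name']}: {t['description']}" for t in tools]
        system += "\n\nAvailable tools:\n" + "\n".join(lines)
    # Delimiters keep the user's text separate from the instructions.
    delimited = f"<user_query>\n{user_query}\n</user_query>"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": delimited},
    ]
```

Keeping the tool list optional lets the same function serve the planning stage, where no tools are needed yet, and the later execution stage, where search API details are added.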

Read the full explanation of this example here.

Example 2: From Customer Complaint to Strategic Action Plan

Most professionals using ChatGPT, Claude, or similar AI assistants are stuck in a prompt loop: they’re constantly refining their questions, hoping the next attempt will give them exactly what they need. But there's a better way.

Let’s see how context engineering transforms a frustrating AI interaction into a powerful business tool.

Imagine a customer support manager receives this escalated complaint:

"I've been trying to cancel my subscription for 3 weeks. Your website keeps crashing when I click cancel. I've called support twice and been on hold for over an hour each time. This is ridiculous. I'm going to dispute the charges with my bank."

He needs actionable guidance fast. Like most professionals, his first instinct is to ask his AI assistant directly. So, he opens ChatGPT, for example, and types:

"Analyze this customer complaint and tell me what to do"

ChatGPT responds with generic advice: apologize, fix the website, improve wait times, process the cancellation. Technically correct, but useless for making actual business decisions. Why? Because ChatGPT has no idea who this customer is, what the manager’s business priorities are, what resources he has available, or what actions he can actually take. It's like asking a stranger on the street for directions without telling them where you're trying to go!

This process can be improved with some adjustments, which we call the Context Engineering approach.

Instead of fighting with follow-up questions, the customer support manager builds context once and transforms every future interaction. Here's how:

Step 1: He creates his context foundation

This goes at the beginning of his ChatGPT conversation, or he can create a custom GPT and add the context in the “Anything else ChatGPT should know about you?” section: