Higgsfield AI Tutorial: Style Control of your Videos

Review & Clip Creation Guide

What if you could control camera angles in an AI-generated video just like a real film director? Higgsfield made it happen. And it earned them a $1B valuation.

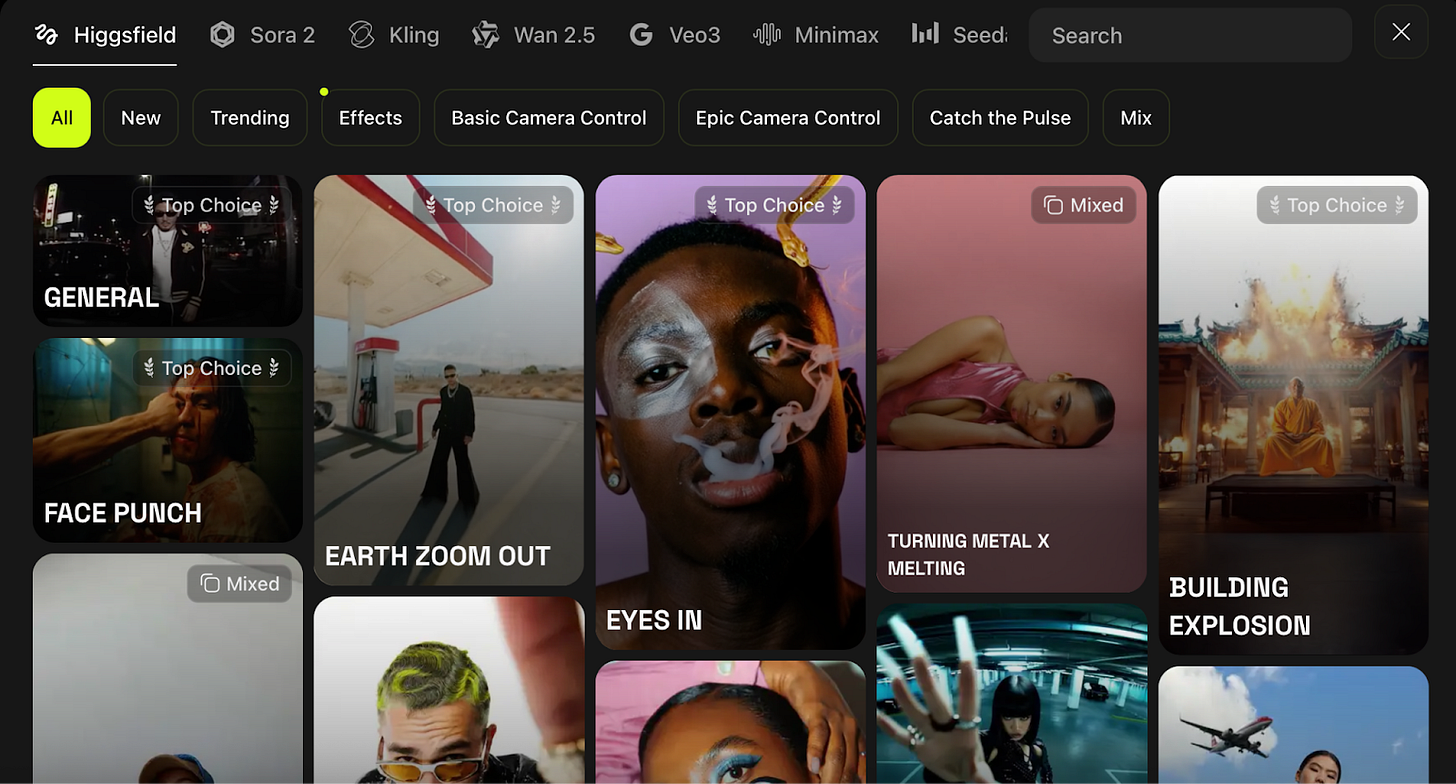

Source: higgsfield.ai

In this tutorial, we will explore what Higgsfield is and what it can do: how you can control camera movement, animate a classic painting, and run a small experiment with a visual “DNA spiral” animation using camera motion prompts.

What is Higgsfield

The Higgsfield model can generate 3–5 second MP4 clips featuring lifelike movement, sophisticated camera dynamics, and refined visual effects. It hardly surprises anyone these days, but it is still worth mentioning that you don’t need advanced skills or expensive software to use it.

Behind the model stands the startup Higgsfield AI, founded by Alexander Mashrabov and Kazakh AI researcher Yerzat Dulat. The team successfully raised $15 million in funding to help creators and creative professionals produce cinematic and controllable videos.

The company specializes in cinematic motion control technology, with its flagship model DoP I2V-01 powering both their web studio and mobile application, Diffuse.

Okay, Features

The founders promise us some advanced techniques, so what is hidden inside?

Camera styles library

It feels like a character customization menu for camera movement: over 50 presets, including dolly-in/out, whip pans, crash zooms, FPV drone, bullet-time, and other movements. Together they add realistic camera dynamics that make short clips look professional.

Mix Feature

It lets us combine these camera movements with no post-editing needed. Choose moves like crash zoom followed by dolly-out to add motion storytelling.

Visual Style Presets

To define the mood or tone of your video, the model provides one-click filters such as VHS, Super 8mm, cinematic, or abstract.

Scene Style Transfer

You can recreate the atmosphere of well-known cinematic scenes by borrowing their lighting and color tone.

AI Image Creation

You can control details and style for branding and storytelling. Just supply high-quality reference photos or an in-depth prompt.

Speak Feature

Converts text to speech and syncs it with facial movement, effectively giving you a talking avatar.

Ads Creation

Generates product ad videos from your photo. Choose between standard or turbo models and select a commercial-style template.

Limitations

Duration Cap

Videos are limited to 5 seconds maximum. Short and sweet, but really short.

Resolution

Currently outputs at 720p only.

Credit Expiration

Monthly credits do not roll over.

Is it really unique?

Unlike the tools from Runway, Pika Labs, and OpenAI, Higgsfield AI hands you full control of the camera. The technology lets you create complex camera movements from a single image and add text queries such as bumps, sharp zooms, overhead shots, and body.

Higgsfield AI’s Integration

The model does not exist in isolation. What is great for your art journey is that the platform integrates other AI models such as Kling, WAN, Google Veo, and Nano Banana. Especially significant was the announcement of full access to Sora 2 and Sora 2 Pro, featuring multi-scene generation, precise sound synchronization, and cinematic 1080p output.

Kling + Higgsfield

Access: directly within the Higgsfield platform (Premium and Pro plans).

Difference from Higgsfield: Kling focuses on fluid animations and realistic physics. It produces smooth object dynamics, textures, and particle effects.

Complementarity: this combination allows Kling to build the movement inside the scene, and Higgsfield to control the movement of the camera itself.

Google Veo 3 + Higgsfield

Access: directly within the Higgsfield platform (Pro, Ultimate, and Creator plans).

Difference from Higgsfield: Veo 3 generates longer, story-driven videos (up to 30 s) with synchronized sound and natural lighting.

Complementarity: Veo 3 delivers the visual realism and sound layer, while Higgsfield can refine the perspective and transitions.

Higgsfield AI vs Pika 1.6

Access: Not available on the Higgsfield platform, but Pika also offers free access.

Difference from Higgsfield: Pika targets quick social media edits with real-time remixing.

Best for: Pika's effects are less expressive, but like Higgsfield it works well for fast creative clips and templates. Higgsfield works better when you need that polished camera feel.

Higgsfield AI Pricing Structure

Credits are the main resource. It all comes down to how many credits your plan includes and how many each generation consumes. I got 40 free daily credits when I signed up. The free plan lets us test the platform without payment.

Credit costs:

Images cost between 0.25 and 5 credits, depending on the model and quality settings:

Higgsfield Soul Image: 0.25 credits

GPT Image Low quality: 1 credit

GPT Image Medium quality: 2 credits

GPT Image High quality: 5 credits

Flux Kontext Max: 1.5 credits

Videos cost 20-50 credits based on duration and settings. Voice and sound generation costs 1 credit per use.
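To get a feel for how quickly credits go, here is a minimal sketch that totals the credit cost of a planned batch of generations. The prices are the ones listed above; the dictionary keys and the `estimate_credits` helper are illustrative names of my own, not part of any Higgsfield API. Because video cost is given only as a 20-50 range, the function returns a low and a high estimate.

```python
# Credit prices copied from the list above; the keys are illustrative names.
IMAGE_CREDITS = {
    "soul": 0.25,
    "gpt_low": 1.0,
    "gpt_medium": 2.0,
    "gpt_high": 5.0,
    "flux_kontext_max": 1.5,
}
VIDEO_CREDITS_RANGE = (20, 50)  # per video, depends on duration and settings
AUDIO_CREDITS = 1               # per voice or sound generation


def estimate_credits(images, videos=0, audio=0):
    """Return (low, high) credit estimates for a batch of generations.

    `images` maps an IMAGE_CREDITS key to the number of images planned.
    """
    image_total = sum(IMAGE_CREDITS[name] * count for name, count in images.items())
    fixed = image_total + audio * AUDIO_CREDITS
    low = fixed + videos * VIDEO_CREDITS_RANGE[0]
    high = fixed + videos * VIDEO_CREDITS_RANGE[1]
    return low, high


# Example batch: 4 Soul images, 1 medium GPT image, 2 videos, 1 voice track.
low, high = estimate_credits({"soul": 4, "gpt_medium": 1}, videos=2, audio=1)
print(f"Estimated cost: {low}-{high} credits")  # Estimated cost: 44.0-104.0 credits
```

Even this small batch lands somewhere between 44 and 104 credits, and the two videos account for almost all of it.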

Paid Plans:

Basic Plan: $9/month

with 150 credits a month

2 concurrent generations

Pro Plan: ~$17.4-29/month (depends on the discount)

with 600 credits a month

Lip Sync studio

Priority access to features

Ultimate Plan: ~$29.4-49/month (depends on the discount)

with 1200 credits a month

All features

Creator Plan: ~$149-249/month (depends on the discount)

with 6000 credits a month

Highest credit limit

All features + discounts on additional credits

Even in the free version, the platform saves content without watermarks.
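To compare the paid plans, a rough calculation of price per credit and videos per month can help. The sketch below uses only the prices and credit amounts listed above, plus the 20-50 credit range for a video; `PLANS` and `plan_summary` are hypothetical names for illustration, and real prices depend on the current discount.

```python
# Plan data copied from the list above: (low, high) monthly price in USD, monthly credits.
PLANS = {
    "Basic": ((9.0, 9.0), 150),
    "Pro": ((17.4, 29.0), 600),
    "Ultimate": ((29.4, 49.0), 1200),
    "Creator": ((149.0, 249.0), 6000),
}
VIDEO_CREDITS_RANGE = (20, 50)  # credits per video, from the credit costs above


def plan_summary(name):
    """Return price-per-credit and videos-per-month ranges for one plan."""
    (price_low, price_high), credits = PLANS[name]
    cheapest_video, priciest_video = VIDEO_CREDITS_RANGE
    return {
        "usd_per_credit": (price_low / credits, price_high / credits),
        # Fewest videos if every clip costs 50 credits, most if every clip costs 20.
        "videos_per_month": (credits // priciest_video, credits // cheapest_video),
    }


for name in PLANS:
    summary = plan_summary(name)
    lo_price, hi_price = summary["usd_per_credit"]
    lo_videos, hi_videos = summary["videos_per_month"]
    print(f"{name}: ${lo_price:.3f}-${hi_price:.3f} per credit, "
          f"{lo_videos}-{hi_videos} videos per month")
```

By this estimate, the Basic plan covers roughly 3 to 7 videos a month at about $0.06 per credit, while the larger plans work out to roughly $0.02-0.05 per credit depending on the discount.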

How to Use Higgsfield AI: A Step-by-Step Guide

They say Higgsfield is able to turn a still image into a cinematic shot. Let’s see how it works.