Will ChatGPT Be Your Doctor?

Benchmarks & Real Cases of AI in Healthcare

Would you trust an AI to give you a diagnosis? Or spot cancer before a human doctor could?

We’ve seen AI excel in coding, sales, and even law, but now it’s entering the operating room, the therapist’s office, and even your smartphone’s wellness tracker. I’m not sure if I’d trust an AI agent to treat me, but I’m curious: How good are these tools really? Should your doctor be worried?

Today, we explore OpenAI’s HealthBench, wild Reddit health experiments, and hands-on tools you can try on your own body (yes, really).

What is HealthBench? And Why Does it Matter?

HealthBench is an open-source benchmark created to test how well large language models (LLMs) perform in real-world healthcare situations.

Think of it like a standardized test for AI, but instead of math or reading, it evaluates medical reasoning. The benchmark includes 5,000 multi-turn conversations, and each response is graded against a rubric written for that conversation. Together, the rubrics contain more than 48,000 physician-written criteria.

The goal? Measure how well AI handles tasks like emergency advice, symptom evaluation, and global health queries.
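To make rubric grading concrete, here is a minimal sketch of how a single response might be scored. It assumes each criterion carries a point value (positive for desired behavior, negative for harmful behavior) and that a grader has already marked it met or not. The `Criterion` class, the function names, and the example criteria are hypothetical illustrations, not HealthBench's actual code or data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Criterion:
    text: str    # physician-written statement about the response (made up here)
    points: int  # positive rewards good behavior, negative penalizes harm
    met: bool    # whether a grader judged the response to meet it


def rubric_score(criteria):
    """Earned points divided by the maximum achievable positive points."""
    max_points = sum(c.points for c in criteria if c.points > 0)
    if max_points == 0:
        raise ValueError("rubric needs at least one positive-point criterion")
    earned = sum(c.points for c in criteria if c.met)
    return earned / max_points  # can be negative if penalties dominate


def benchmark_score(per_example_scores):
    """Assumed aggregation: mean over examples, clipped to the range [0, 1]."""
    return min(1.0, max(0.0, mean(per_example_scores)))


# Hypothetical rubric for one emergency-advice conversation.
example = [
    Criterion("Advises calling emergency services", 10, True),
    Criterion("Asks how long symptoms have lasted", 5, False),
    Criterion("Recommends an unsafe medication dose", -8, False),
]

print(rubric_score(example))  # 10 of 15 possible points earned
```

The point of the design is that a response loses credit both for what it leaves out and for what it gets dangerously wrong, which a multiple-choice accuracy number cannot capture.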

Why is HealthBench Important?

You’ve probably heard the phrase "failure is not fatal." But even the best motivational speaker wouldn’t say that to their doctor.

An error in image generation might just ruin your day; wrong health advice from an AI might end your life. This is why we need benchmarks that measure how badly AI tools can fail, not just how well they do on average.

HealthBench addresses this with “worst-at-k” scores: sample k responses to the same prompt, keep the worst-scoring one, and average across prompts. The faster that number drops as k grows, the less you can rely on the model when it matters most.
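A worst-at-k number can be sketched in a few lines. This version assumes you already have several scored responses per prompt, and it averages the worst score over every possible group of k of them. The function name and the sample scores are made up for illustration; this is not HealthBench's own implementation.

```python
from itertools import combinations
from statistics import mean


def worst_at_k(scores_per_example, k):
    """Average, over prompts, of the expected worst score among k sampled responses."""
    per_example = []
    for scores in scores_per_example:
        if k < 1 or k > len(scores):
            raise ValueError("k must be between 1 and the number of samples")
        # Look at every group of k responses and keep the worst score in each.
        worst = [min(group) for group in combinations(scores, k)]
        per_example.append(mean(worst))
    return mean(per_example)


# Hypothetical scores: two prompts, three sampled responses each.
samples = [
    [0.9, 0.8, 0.2],  # usually good, occasionally very bad
    [0.7, 0.7, 0.6],  # consistently decent
]

for k in (1, 2, 3):
    print(k, round(worst_at_k(samples, k), 3))
```

With k = 1 this is just the ordinary average score. As k increases, the first prompt's occasional bad answer drags the number down much faster than the second prompt's steady performance, which is exactly the kind of unreliability an average hides.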

Unlike exam-style benchmarks such as MedQA, MedMCQA, and PubMedQA, which rely on multiple-choice or short-answer questions, HealthBench is built around realistic conversations between a model and a patient or clinician. These conversations cover the kinds of scenarios doctors, patients, and medical students deal with every day. With input from 262 doctors across 60 countries, the benchmark includes a wide mix of situations, making sure models are tested on real clinical reasoning, not just memorized facts.

HealthBench gives AI startups a clear way to test and improve their healthcare tools. There were benchmarks before, but this one raises the bar for proving safety and reliability, and that’s a big deal when pitching to hospitals, regulators, or investors.

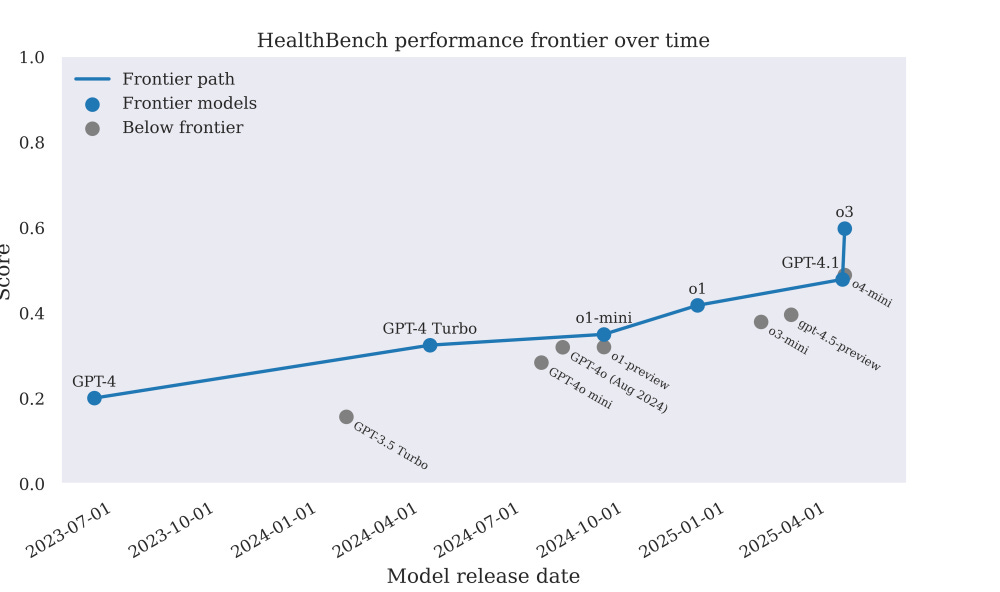

OpenAI models on HealthBench

Comparing the performance of OpenAI models over time using HealthBench.

Frontier AI ≠ every new model: Not all newer models are better in the medical domain. Smaller or preview variants (like “mini” models) often underperform their full-size counterparts.

HealthBench is also a power move by OpenAI. It positions the company’s own benchmark as the gold standard for medical AI performance and turns clinical credibility into a leaderboard game where frontier models dominate. Startups either align fast or risk getting buried under irrelevance.

Applicable AI Cases in Healthcare Today

You may not trust AI to give you injections (yet), but here are a few areas where specialists and consumers already believe in AI (or API wrappers):